Recently, I made a disparaging comment about data that was not statistically significant but rather was “trending” toward significance:

There was an apparent “trend” towards fewer cases of CP and less developmental problems. “Trends” are code for “We didn’t find anything but surely it’s not all a waste of time.”

This comment was in reference to the ACTOMgSO4 trial which studied prevention of cerebral palsy using magnesium sulfate. This study is often cited in support of this increasingly common but not evidence-based practice. To be clear, ACTOMgSO4 found the following: total pediatric mortality, cerebral palsy in survivors, and the combined outcome of death plus cerebral palsy were not statistically significantly different in the treatment group versus the placebo group.

Yet this study is quoted time and time again as evidence supporting the practice of antenatal magnesium. The primary author of the study, Crowther, went on to write one of the most influential meta-analyses on the issue, which used the non-significant subset data from the BEAM Trial to re-envision the non-significant data from the ACTOMgSO4 Trial. Indeed, Rouse, author of the BEAM Trial study, was a co-author of this meta-analysis. If this seems like a conflict of interest, it is. But there is only so much that can be done to make a silk purse out of a sow’s ear. These authors keep claiming that the “trend” is significant (even though the data is not).

Keep in mind that all non-significant data has a “trend,” but the bottom line is that it isn’t significant. Any result that is not exactly the same as the comparison result must, by definition, “trend” one way or the other. It means nothing. Imagine that I do a study with 50 people in each arm: in the intervention arm 21 people get better, while in the placebo arm only 19 get better. The difference is not statistically significant. But I really, really believe in my hypothesis, and even though I stated before analyzing my data that a p value < 0.05 would define statistical significance, I still want to get my study published and lend support to my pet idea; so I draw one or more of the following “true” conclusions:

- “More patients in the treatment group got better than in the placebo group.”

- “There was a trend towards statistical significance.”

- “We observed no harms from the intervention.”

- “The intervention may lead to better outcomes.”

All of those statements are true, or are at least half-truths. So is this one:

- “The intervention was no better than placebo at treating the disease. There is no evidence that patients benefited from the intervention.”
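The hypothetical 21-versus-19 study above is easy to check. The sketch below uses Fisher’s exact test (my choice; no particular test is specified here), written with only the Python standard library, and then simulates trials in which the intervention truly does nothing, to show how often a “trend” appears anyway. The function names and simulation settings (2,000 trials, a true improvement rate of 40% in both arms, a fixed random seed) are hypothetical illustrations, not taken from any study discussed in this post.

```python
import math
import random


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table

        [[a, b],   # intervention arm: improved, not improved
         [c, d]]   # placebo arm:      improved, not improved

    Adds up the probability of every table with the same row and column
    totals that is no more likely than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = math.comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability that x of the col1 improvers fall in the intervention arm.
        return math.comb(row1, x) * math.comb(row2, col1 - x) / denom

    observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # The small relative tolerance keeps floating-point noise from dropping tied tables.
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= observed * (1 + 1e-7))


def simulate_null_trials(n_trials=2000, n_per_arm=50, true_rate=0.4, seed=1):
    """Simulate trials in which the intervention does nothing: both arms
    share the same true improvement rate. Returns the fraction of trials
    whose arms differ at all (a "trend") and the fraction with p < 0.05."""
    rng = random.Random(seed)
    trending = significant = 0
    for _ in range(n_trials):
        treat = sum(rng.random() < true_rate for _ in range(n_per_arm))
        placebo = sum(rng.random() < true_rate for _ in range(n_per_arm))
        if treat != placebo:
            trending += 1  # any difference "trends" one way or the other
        p = fisher_exact_two_sided(treat, n_per_arm - treat, placebo, n_per_arm - placebo)
        if p < 0.05:
            significant += 1
    return trending / n_trials, significant / n_trials


# The hypothetical study: 21 of 50 improve on treatment, 19 of 50 on placebo.
p = fisher_exact_two_sided(21, 29, 19, 31)
print(f"21/50 vs 19/50: two-sided p = {p:.2f}")

trend_frac, sig_frac = simulate_null_trials()
print(f"null trials showing a 'trend': {trend_frac:.0%}; significant: {sig_frac:.0%}")
```

For the hypothetical numbers the p value lands far above 0.05. In the simulated trials where the treatment does nothing, the large majority of studies still show the arms differing in one direction or the other, while only a small fraction reach significance. A “trend” is simply what chance looks like.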

How did the authors of ACTOMgSO4 try to make a silk purse out of a sow’s ear? They said,

Total pediatric mortality, cerebral palsy in survivors, and combined death or cerebral palsy were less frequent for infants exposed to magnesium sulfate, but none of the differences were statistically significant.

That’s a really, really important ‘but’ at the end of that sentence. Their overall conclusions:

Magnesium sulfate given to women immediately before very preterm birth may improve important pediatric outcomes. No serious harmful effects were seen.

Sound familiar? It’s definitely a bit of doublespeak. It “may improve” outcomes, and it may not. Why write it this way? Bias. The authors knew what they wanted to prove when designing the study, and despite every attempt, they just couldn’t massage the data enough to produce a significant p value. Readers are often confused by the careful use of the word ‘may’ in articles; if positive, affirmative data had been found, the word ‘may’ would have been omitted. Consider the non-conditional language in this study’s conclusion:

Although one-step screening was associated with more patients being treated for gestational diabetes, it was not associated with a decrease in large-for-gestational-age or macrosomic neonates but was associated with an increased rate of primary cesarean delivery.

No ifs, ands, or mays about it. Half-truth writing, however, allows other authors to still claim some value in their work without technically lying. It is misleading nonetheless, whether intentionally or unintentionally. Furthermore, it is unnecessary: there is value in the work. The value of the ACTOMgSO4 study lay in showing that magnesium was not better than placebo in preventing cerebral palsy; but that’s not the outcome the authors were expecting to find, hence the sophistry.

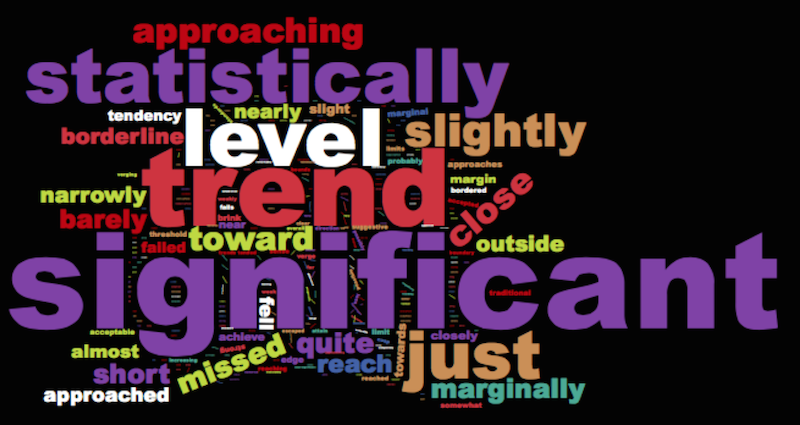

The Probable Error blog has compiled an amazing list of over 500 terms that authors have used to describe non-significant p values. Take a glance here; it’s truly extraordinary. The list includes gems like significant tendency (p = 0.09), possibly marginally significant (p = 0.116), not totally significant (p = 0.09), an apparent trend (p = 0.286), not significant in the narrow sense of the word (p = 0.29), and about 500 other Orwellian ways of saying the same thing: NOT SIGNIFICANT.

I certainly won’t pretend that p values are everything; I have made that abundantly clear. We do need to focus on issues like numbers needed to benefit or harm. But we also need to make sure that those numbers are not products of random chance. We need to use Bayesian inference to decide how probable or improbable a finding actually is. But the culture among scientific authors has crossed over into the absurd, as shown in the list of silly rationalizations noted above. If the designers of a study don’t care about the p value, they shouldn’t publish it; but if they do, they should respect it and not try to minimize the fact that the study failed to reject the null hypothesis. This type of intellectual dishonesty partly drives the p-hacking and manipulation that is so prevalent today.
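A minimal sketch of both points, reusing the hypothetical 50-per-arm study described earlier (21 versus 19 patients improving). It computes the absolute risk difference with a simple Wald 95% confidence interval (my choice of method; better intervals exist for small samples), the corresponding number needed to treat, and a basic Bayesian false positive risk: the chance that a “significant” result is wrong, given a prior probability that the hypothesis is true. The function names, the prior of 0.1, and the power of 0.8 are illustrative assumptions, not figures from any study discussed here.

```python
import math


def risk_difference_ci(events_tx, n_tx, events_ctl, n_ctl, z=1.96):
    """Absolute risk difference (treatment minus control) in the proportion
    improving, with a simple Wald 95% confidence interval."""
    p1, p2 = events_tx / n_tx, events_ctl / n_ctl
    rd = p1 - p2
    se = math.sqrt(p1 * (1 - p1) / n_tx + p2 * (1 - p2) / n_ctl)
    return rd, rd - z * se, rd + z * se


def nnt(rd):
    """Number needed to treat for one extra good outcome (inf if rd == 0).
    A negative value means the treatment arm did worse (a number needed to harm)."""
    return math.inf if rd == 0 else 1 / rd


def false_positive_risk(prior, power, alpha=0.05):
    """Probability that a 'significant' result is a false positive, given the
    prior probability that the tested hypothesis is true."""
    true_pos = power * prior          # real effects that reach significance
    false_pos = alpha * (1 - prior)   # null effects that reach significance by chance
    return false_pos / (true_pos + false_pos)


rd, lo, hi = risk_difference_ci(21, 50, 19, 50)
print(f"risk difference {rd:.2f} (95% CI {lo:.2f} to {hi:.2f}); NNT = {nnt(rd):.0f}")
# The interval includes 0, so the data fit benefit, no effect, or harm.
print(f"false positive risk: {false_positive_risk(prior=0.1, power=0.8):.2f}")
```

The point estimate suggests one extra patient improves for every 25 treated, but the interval runs from roughly -0.15 to 0.23, so the NNT on its own hides the fact that harm is just as compatible with the data as benefit. Under these assumed inputs, even a genuinely significant result would be a false positive more than a third of the time.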

If differences between groups in a study are truly important, we should be able to demonstrate them without relying on faulty and misleading statistical analysis. Such misleading statements would not be allowed in a court of law. In fact, in this court decision, which excluded the testimony of the epidemiologist Anick Bérard, who claimed that Zoloft caused birth defects, the judge stated:

Dr. Bérard testified that, in her view, statistical significance is certainly important within a study, but when drawing conclusions from multiple studies, it is acceptable scientific practice to look at trends across studies, even when the findings are not statistically significant. In support of this proposition, she cited a single source, a textbook by epidemiologist Kenneth Rothman, and testified to an “evolution of the thinking of the importance of statistical significance.” Epidemiology is not a novel form of scientific expertise. However, Dr. Bérard’s reliance on trends in non-statistically significant data to draw conclusions about teratogenicity, rather than on replicated statistically significant findings, is a novel methodology.

These same statistical methods were used in the meta-analyses of magnesium to prevent CP, which combined the non-significant findings of the ACTOMgSO4 and PreMAG studies with the findings from the BEAM trial; but, ultimately, not significant means not significant, no matter how it’s twisted.