How did our profession get so far down the wrong track regarding magnesium? Why is it today that most women in preterm labor are still exposed to magnesium sulfate, either as a tocolytic or to prevent cerebral palsy or both? If you have read the three part series on magnesium, you’re either asking yourself these questions or you have convinced yourself that I have not provided some part of the data or fully explained the issues. It is hard to believe that we all, like those lemmings, have marched off the wrong cliff. If you think that something is missing in my presentation, please send it to me as soon as you find it.

About 10 years ago, I gave an exhaustive one-hour presentation on the data regarding magnesium as a tocolytic. The audience, at an ACOG conference, was similarly forced to make a decision by the overwhelming nature of the data presented. Several older physicians came up to me and said, “I’m just sure there was another paper you missed that said that mag works for 48 hours.” Of course, there wasn’t; but that idea had been so often repeated that these physicians assumed it must have a source. Some told me of the Canadian Ritodrine Study and how it found that tocolytics, or at least ritodrine, were effective versus placebo at preventing delivery within 48 hours, allowing time to administer steroids. The study did not find that, but I was able to find where the study had been misquoted to say so in a review article, and then that mistaken review article was used as a source by other articles. Our cognitive biases lead us to stop asking questions once we find what appears to be support for our practices or beliefs. This is called search satisficing or confirmation bias.

On another occasion, a medical student (who today is a very wonderful OB/GYN) challenged my assertion that magnesium was an ineffective tocolytic by bringing me a summary of a then-recent meta-analysis of the available placebo-controlled, randomized trials, which concluded that “magnesium was an effective tocolytic agent.” The short summary, printed in the ACOG Clinical Review newsletter, should have read “magnesium was not an effective tocolytic agent.” Once we downloaded the paper that had been referred to in the review, the typographical mistake was obvious and I was redeemed in his eyes. How did this even happen? I am sure that the editor of the newsletter was so accustomed to using magnesium as a tocolytic that the typo didn’t even register as such in his head. Nor did this seem unusual to the student or many thousands of other readers, since they too were used to seeing magnesium used as a tocolytic on a daily basis.

I, on the other hand, lucked out because I had read most of the sixteen or so trials that were reviewed in the meta-analysis, so I knew that something didn’t quite make sense. This was the stimulus to check the primary source.

Lesson #1: Always check the primary sources.

Honest mistakes happen and dishonest mistakes happen. Don’t be led astray by either. Most of the literature is regurgitation of older literature through commentaries, reviews, and meta-analyses. Textbook authorship is similarly biased. Most texts start with a review of what the author or authors actually do in practice, then a literature search is assembled to provide footnotes for each of these points to validate the protocol. Since a literature search can provide positive support for almost any practice, including suboccipital trephining, this common authorship practice is dependent upon the author being “right” in the first place. So always check the primary sources, and what’s more, do an exhaustive literature review to see if that primary source is an outlier or if it is consistent with what we have generally found about the subject. Today, suboccipital trephining is the outlier, but if you were willing to rely on just a few poorly designed papers from the past, I could convince you that it is the right thing to do.

Lesson #2: Always consider the full body of evidence.

Scientific consensus is both a good and a bad thing. It is a good thing when it is based upon a consensus of well-designed, validated, and replicated scientific studies. It is a bad thing when it merely represents common practice or popular opinion. The literature-based scientific consensus has always been that magnesium is an ineffective tocolytic and it does not reduce the risk of cerebral palsy in surviving low birth weight infants. But the popular opinion-based scientific consensus has varied widely on both issues throughout the years.

Popular opinion and common practice often are not rooted in science. David Grimes, in the previously mentioned Green Journal editorial, said that magnesium tocolysis “reflects inadequate progress toward rational therapeutics in obstetrics.” That was a kind way of saying that its popular and widespread use was irrational given the scientific literature available on the subject. History has taught us that most widely held scientific beliefs are eventually overturned in the generations to come. Each generation who believed things like the geocentric theory of planetary motion did so based on what they considered to be highly rational evidence; the average person, even the average scientist, believed it because someone whom they assumed was smarter than them told them that it was true. We make the same mistakes today.

Lesson #3: Real scientific consensus is based on the agreement of well-designed, validated, and replicated scientific studies, not opinions or common practice.

We far too often defer to those powerful personalities whom we assume to be experts in their areas of interest. Certainly, people who have spent their whole careers, or at least a large portion of them, researching and studying one particular area of science should be and are given a forum for expressing what they have learned. Nevertheless, their opinions and ideas should not be given carte blanche. How many of Sigmund Freud’s absurd and ridiculous theories do we still believe today? Why was he ever allowed to opine, for example, that cerebral palsy was caused by obstetricians?

Because personal bias and the pressures and demands of career-dependent scientific investigation are such large motivators for things like scientific fraud, P-hacking, biased design of studies, and self-promoting interpretation of data, those who should have the most to offer are often also those who have the most reason and motivation to be consciously or unconsciously dishonest about the available data. This unconscious dishonesty is what we call cognitive bias. Even though the average practicing obstetrician may not have a particular set of cognitive biases that would lead her to misinterpret a particular set of data, if she chooses to endow with too much authority the opinions of certain so-called “thought leaders,” then she takes on by default the faulty conclusions of those cognitive biases. Sometimes the Emperor has no clothes, and the last person to realize this is the Emperor.

Lesson #4: Don’t overly rely upon the opinion of experts, particularly when those experts have a career at stake based upon the promulgation of their pet theories.

Far too often, what should be a strength (deep immersion in a narrow field of knowledge) becomes a weakness (a lack of context and dogmatic beliefs that activate cognitive biases). This is what is meant by the expression, “He can’t see the forest for the trees.” Stop assuming that an expert on a single oak tree is at the same time an expert on the entire biodiversity of the forest. Not only does he sometimes lose sight of how the whole forest must work together, but oftentimes he will be so passionate about preserving the one oak tree that he will destroy the forest.

Subspecialists sometimes value their areas of interest too much, and an outcome or value that they hold as important may not be as important to others who have a stake. Everything we do has both potential risks and potential benefits. Balancing these risks and benefits, while respecting the values of our patients, is the art of clinical medicine. Much harm and error is brought about when we over-value certain benefits and under-appreciate certain risks. The blinders worn by those who are too deep in the forest are often the cause of this form of cognitive bias.

Who should best make our guideline for screening for cervical cancer, a general gynecologist or a gynecologic oncologist? Perhaps the correct answer is both. But the gynecologic oncologist, having too often seen the horror stories of undiagnosed and advanced cervical cancer, and having perhaps never seen the harms caused by false positive diagnoses and overtreatment of cancer precursors, is too biased to go it alone in designing such a guideline. The same problem applies to magnesium. Infants in the BEAM Trial were over 4 times as likely to die as they were to survive with moderate or severe cerebral palsy. The larger number of perinatal deaths can easily absorb an excess 10 or so deaths without reaching statistical significance; at the same time, a similar change in the much smaller number of survivors with cerebral palsy will make that category appear to change in a statistically significant way. If the only thing you care about is decreasing the number of survivors with cerebral palsy, then given enough attempts you will be able to design a study that makes this appear to be true. But we have to consider all outcomes, and we have to appreciate the absolute risk reduction or risk increase associated with our decisions.
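To make the point about rare versus common outcomes concrete, here is a rough sketch using a pooled two-proportion z-test. The counts are hypothetical and chosen only for illustration; they are not the BEAM Trial data. The arm size of 1,000 and the use of the whole arm as the denominator for both outcomes are simplifying assumptions. The same shift of 15 infants is applied to a common outcome (death) and to a rare one (cerebral palsy).

```python
import math


def two_proportion_p_value(events_a, n_a, events_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    p_a = events_a / n_a
    p_b = events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / standard_error
    # For a standard normal variable, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


# HYPOTHETICAL counts for illustration only (NOT data from any real trial).
N_PER_ARM = 1000
control = {"death": 160, "cp": 35}
treated = {"death": 175, "cp": 20}  # 15 more deaths, 15 fewer CP survivors

p_death = two_proportion_p_value(
    treated["death"], N_PER_ARM, control["death"], N_PER_ARM
)
p_cp = two_proportion_p_value(
    treated["cp"], N_PER_ARM, control["cp"], N_PER_ARM
)
# Combined outcome: death OR survival with cerebral palsy.
p_combined = two_proportion_p_value(
    treated["death"] + treated["cp"], N_PER_ARM,
    control["death"] + control["cp"], N_PER_ARM,
)

print(f"Deaths:         p = {p_death:.3f}")
print(f"Cerebral palsy: p = {p_cp:.3f}")
print(f"Death or CP:    p = {p_combined:.3f}")
```

With these made-up numbers, the shift of 15 crosses the conventional 0.05 threshold for the rare outcome but not for the common one, and the combined outcome does not differ between the arms at all.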

Lesson #5: Don’t myopically consider just one outcome or even a handful of outcomes; consider all outcomes, including unexpected and unintended consequences.

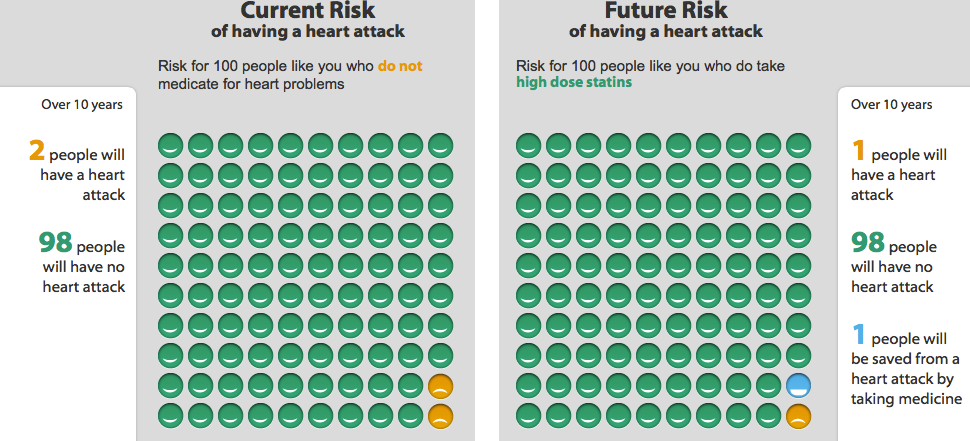

While routine mammography in the sixth decade of life decreases the risk of death from breast cancer by 20%, it does not decrease the risk of death from cancer. Be careful to choose the outcomes that matter. I recently saw a patient who was taking a statin drug for elevated cholesterol. She was an otherwise healthy woman with no cardiovascular risk factors. We used a risk and benefits calculation tool from the Mayo Clinic website to determine the real value of her continuing to take this drug for the next 10 years.

It turns out that if she takes the drug every day for the next 10 years, given her other risk factors and health indicators, she would reduce her risk of having a nonfatal myocardial infarction by one percentage point in the next decade. She had been having some side effects with the medication and her primary care physician was adamant that she not stop it. She, of course, had no idea that the continuous and daily usage of this medication afforded her so little benefit over 10 years.

The particular drug, by the way, costs $270 per month (Crestor). Invested at a 6% interest rate, this represents lost savings of $45,268.11 over that same ten-year period. Since there was only a one percentage point reduction in her risk of a nonfatal MI, 100 patients like her would have to take the drug for ten years to prevent one nonfatal MI, at a total cost of $4,526,811. Yes, that’s right, just 100 patients! The typical primary care doctor probably has 1,000 patients like this in his practice. That $45M over a ten-year period per primary care doctor is why medical care costs so much in the US and why we get free lunches from drug reps.

If all you care about is reducing the risk of heart attacks, then you will advocate for using this drug. That single nonfatal MI is your oak tree. While the statement, “Taking this medicine will reduce your risk of a heart attack” is true, it really does not tell the whole story. We have to appreciate how much risk reduction occurs and at what cost. Most patients would rather have the money spent on that drug to help their children or grandchildren get an education than to reduce their risk of a nonfatal MI by one percentage point. For the cost of preventing one nonfatal MI, we could’ve paid for 150,000 flu shots (at $30 per shot), preventing about 4,500 cases of the flu, some of which would have been fatal.
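The arithmetic behind these figures can be sketched in a few lines. The compounding convention is an assumption on my part: treating the $270 per month as a single $3,240 deposit at the start of each year, compounded annually at 6%, reproduces the $45,268 figure to within a cent. All names in the code are illustrative.

```python
MONTHLY_DRUG_COST = 270.00     # dollars per month, from the text
ANNUAL_RETURN = 0.06           # 6% interest, from the text
YEARS = 10
ARR_PERCENTAGE_POINTS = 1      # absolute risk reduction over 10 years
PATIENTS_PER_PRACTICE = 1000   # the text's estimate for one primary care doctor
FLU_SHOT_COST = 30.00          # dollars per shot, from the text


def future_value_of_annual_deposits(deposit, rate, years):
    """Value after `years` of one deposit made at the START of each year.

    ASSUMPTION: annual deposits and annual compounding (not monthly).
    """
    balance = 0.0
    for _ in range(years):
        balance = (balance + deposit) * (1 + rate)
    return balance


lost_savings = future_value_of_annual_deposits(
    MONTHLY_DRUG_COST * 12, ANNUAL_RETURN, YEARS
)

# Number needed to treat = 1 / absolute risk reduction.
# With the reduction expressed in percentage points, that is 100 / points.
number_needed_to_treat = 100 / ARR_PERCENTAGE_POINTS

cost_per_mi_prevented = lost_savings * number_needed_to_treat
cost_per_practice = lost_savings * PATIENTS_PER_PRACTICE
flu_shots_affordable = cost_per_mi_prevented / FLU_SHOT_COST

print(f"Lost savings per patient:   ${lost_savings:,.2f}")
print(f"Number needed to treat:     {number_needed_to_treat:.0f}")
print(f"Cost per nonfatal MI saved: ${cost_per_mi_prevented:,.0f}")
print(f"Cost per practice:          ${cost_per_practice:,.0f}")
print(f"Flu shots for that cost:    {flu_shots_affordable:,.0f}")
```

Changing `ARR_PERCENTAGE_POINTS` or `MONTHLY_DRUG_COST` shows how quickly the cost per event prevented moves when the absolute benefit is small.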

Lesson #6: Express the real risks and benefits of what we do in absolute terms, so that both we and the patients truly understand them.

Of course, this last point assumes that there are any statistically significant benefits from treating preterm labor with magnesium sulfate; there are not. In the case of performing routine mammography on low-risk women or using statin medications for isolated hyperlipidemia, there are statistically significant potential benefits; yet we must frame them and explain them to our patients in terms that realistically express the potential benefits, not paternalistically impose our will upon the patients.

Clear benefits are easy to see in studies and they are easy to explain to patients. We may argue about how valuable a flu shot is to certain populations, but it is clear that flu shots are relatively low risk and prevent many people from succumbing to the flu. I don’t need to P-hack the data or perform a meta-analysis of nonsignificant studies to try to make this point. This is all the more important when there are multiple explanations for a given set of data or multiple confounders that influence the data. Studies of neurological outcomes of very low birth weight babies are confounded by literally hundreds of variables. Beyond obvious variables like gestational age at time of delivery, race, gender, circumstances leading up to early delivery, health status of the mother, mode of delivery, completion of antenatal steroids, and other similar factors, there are hundreds of other influential factors that we cannot account for easily, like location of delivery, genetics of the parents, quality of care provided by staff who were on call that day, quality of care in the NICU, variation in local interpretation of diagnostic parameters for things like head ultrasounds or neurodevelopmental assessment tools, etc.

It is probable that even things like time of day of delivery (perhaps a measure of the readiness of the NICU staff) have more to do with subsequent neurodevelopmental outcomes of very low birth weight babies than does the mother receiving 4 g of magnesium sulfate, only a small fraction of which even crossed over to affect the fetus in the first place. Dare I call this common sense? But since neither the initial data of the PreMAG study nor the school-age follow-up data showed any statistically significant differences between the children exposed to 4 g of magnesium and the children who were not exposed, this is more than just common sense; it is supported by scientific data.

Lesson #7: Real outcomes are really obvious in studies, and they are not dependent upon controversial statistical methodologies or P-hacking.

Cigarette smoking clearly is related to adverse outcomes, even though the father of Frequentist statistics tried his best to prove otherwise. This fact is not difficult to show and it is demonstrated time and time again in a multitude of studies conducted in a multitude of ways with a multitude of outcomes. It’s a real outcome and it is really obvious.

Still, the biggest reason why magnesium has been and continues to be a source of silliness in obstetrics is that we physicians refuse to think critically and scientifically. We should always first seek to disprove any hypothesis or theory. If a scientific study, designed well and honestly executed, were to show some benefit to children of maternal exposure to magnesium sulfate, despite the investigators’ very best efforts to disprove such an unnatural assumption, then we might be onto something.

On the other hand, when the name of the trial is ‘Beneficial Effects of Antenatal Magnesium,’ we are left to question how diligently the authors worked to disprove this hypothesis. Even if the data suggested some potential benefit, this does not mean that the alternative hypothesis is true. In other words, if the null hypothesis were that antenatal magnesium provided no neurological benefits to children, then finding data that suggest that the null hypothesis is not true does not mean that the authors’ preferred alternative hypothesis is true. This is one of the great faults of the producers of scientific literature; they often draw conclusions that the data themselves do not actually support. What other alternative explanations are also compatible with the data? Usually there are many.

Lesson #8: Always seek to disprove your hypothesis or theory, and always consider alternative hypotheses that fit the data.

Too often today in arguments, we hear appeals to Science, as if science were positioned to prove (or disprove) anything. When you hear people say things like, “That study disproved that theory,” or, “Science has proven that…,” you are hearing an abuse of what science is and what it offers to our knowledge. Similarly, when you hear people invoke the “Scientific consensus,” or when you hear people make statements that carry a degree of certitude about anything, like, “We know such and such based on such and such,” you may be hearing people who have fallen prey to the cult of scientism. This type of reductionist philosophy oversimplifies very complex problems and claims powers for science that science itself does not claim. Science is not capable of determining all truth and knowledge. It is, however, able to conclude that some things are more or less likely than others. We do not prove or disprove anything. We simply know that some ideas or explanations are more probable than others. This probabilistic worldview is a corollary of Bayesian reasoning or plausible inference. It embraces uncertainty and rejects dogma. It favors complexity and rejects reductionism.

Lesson #9: Approach every question critically; seek to disprove hypotheses; embrace uncertainty; consider how likely a thing is based on all available evidence.