In Part 1, we learned about magnesium’s role in treating and preventing eclampsia. In Part 2, we discussed the mistakes made in using it as a tocolytic; its efficacy as a tocolytic was thoroughly refuted by the early 2000s. By 2008, no credible physician could justify the use of magnesium sulfate for the treatment of preterm labor with anything more than anecdotal stories. This did not, however, mean that obstetricians stopped using it. To the contrary: while many younger docs began using nifedipine or indomethacin as a substitute, the older physicians dug in deeper in their intransigent support of magnesium as a tocolytic. But the literature was not only stating that magnesium didn’t help, it was suggesting that it was, in fact, killing babies. This line of attack was particularly painful to its zealots.

The side effect and safety profile of magnesium had always been problematic. It was utilized in humans long before any safety studies were available. While its side effect profile was clearly superior to ethanol, it was still associated with side effects ranging from nausea and vomiting to pulmonary edema, urinary retention, respiratory depression and maternal death.

But neonatal risks were largely undetermined. Recall that one of the first prospective trials of magnesium as a tocolytic, performed by Cox et al. at Parkland (1990), found 8 fetal or pediatric deaths in the magnesium group compared to only 2 in the placebo group. This was an alarming finding, particularly since magnesium was also found to be ineffective at arresting labor.

Still, many of the folks who have been ardent supporters of magnesium tend to discount randomized, placebo controlled trials. But magnesium supporters were thrilled with a 1995 study by Nelson and Grether. The authors did a retrospective, case-control study in California of 155,636 children followed to age 3. They found that 7% of very low birth weight (VLBW) babies who developed cerebral palsy (CP) had been exposed to magnesium in utero while 36% of VLBW babies who did not develop CP had been exposed to magnesium. In other words, VLBW babies who did not develop CP were more likely to have been exposed to magnesium in utero.

This surprising finding gave new energy to the magnesium enthusiasts and many were ready to declare that magnesium would prevent cerebral palsy in preterm infants, even before quality evidence was available. The numbers in the Nelson and Grether study sound impressive: 155,636 children enrolled, with 7% magnesium exposure in the CP arm compared to 36% exposure in the non-CP arm. But allow me to write a different summary that reflects the actual findings of the study:

We reviewed the charts of 155,636 births occurring between 1983-1985. We found 117 infants who were born weighing less than 1500 grams. 42 of these children went on to develop cerebral palsy while 75 did not. 39 of 42 children who later developed CP were not exposed to magnesium nor were 48 of 75 children who did not develop CP. We were unable to control for differences in quality of care at different nurseries or other institutional factors. It is possible (if not likely) that institutions using magnesium sulfate tocolysis in 1983 were tertiary care centers associated with higher quality neonatal care units.
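For readers who want to check the arithmetic, here is a minimal Python sketch that rebuilds the 2x2 table from the counts in the summary above. The variable names are my own, and the crude odds ratio is an unadjusted illustration, not the analysis published by Nelson and Grether.

```python
# Counts taken from the summary above (infants weighing < 1500 g).
cp_total, cp_unexposed = 42, 39          # developed CP
no_cp_total, no_cp_unexposed = 75, 48    # did not develop CP

# Exposed counts follow by subtraction.
cp_exposed = cp_total - cp_unexposed             # 3
no_cp_exposed = no_cp_total - no_cp_unexposed    # 27


def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


cp_exposure_pct = pct(cp_exposed, cp_total)
no_cp_exposure_pct = pct(no_cp_exposed, no_cp_total)

# Crude (unadjusted) odds ratio of magnesium exposure, CP versus no CP.
crude_odds_ratio = (cp_exposed * no_cp_unexposed) / (cp_unexposed * no_cp_exposed)

print(f"Infants analyzed: {cp_total + no_cp_total}")
print(f"Exposed, CP group: {cp_exposed}/{cp_total} ({cp_exposure_pct}%)")
print(f"Exposed, no-CP group: {no_cp_exposed}/{no_cp_total} ({no_cp_exposure_pct}%)")
print(f"Crude odds ratio: {crude_odds_ratio:.2f}")
```

The headline 7% and 36% figures rest on 3 exposed infants in one group and 27 in the other, out of 117 infants in total.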

So I have two points to make. First, perspective and bias matter when interpreting observational data. There are thousands of potential explanations of this data. Second, be careful not to be wowed by irrelevant data. Dozens of publications reported on this study by stating, “A retrospective study of over 150,000 children…” This is a way of intentionally biasing the reader. What if instead the first sentence were, “A small, retrospective study of 117 children with poorly controlled variables found …”? Nevertheless, this small retrospective study of 117 poorly matched children led to the MagNET trial.

In MagNET (Magnesium and Neurologic Endpoints Trial), women in preterm labor dilated less than 4 cm were randomized to magnesium versus another tocolytic, while women dilated more than 4 cm were randomized to magnesium versus saline. The trial was stopped at 15 months because of the high rate of pediatric mortality noted in the magnesium group (10 deaths in the magnesium group vs 1 in the placebo group; p=0.02).

The authors (Mittendorf et al.) later published other findings collected from the MagNET data. They found that:

- Newborns with higher ionized magnesium levels in umbilical samples were more likely to have intraventricular hemorrhage (IVH) and the perinatal demises were 14.8x as likely to have Grade III hemorrhage (p=0.025).

- Median umbilical ionized magnesium levels were higher among infants who died (0.76 mmol/L) compared to those who survived (0.55 mmol/L) and this finding was statistically significant (p-value = 0.03).

This type of dose-dependent relationship is very important as evidence of causation, rather than just correlation. The IVH data also support the proposed biologic theory that magnesium may have an anti-platelet aggregation effect and therefore an anticoagulant effect. Indeed, 25% of infants who died had Grade III IVH compared to only 2% of survivors, with ionized magnesium levels of 1.00 mmol/L among infants with Grade III IVH compared to 0.67 mmol/L in infants with Grade I IVH. These data, too, were statistically significant. IVH in turn is associated with apneic events, a common cause of death among these infants. Let’s note that children who survive with Grade III or IV IVH are also far more likely to develop CP (meaning that one would expect a lower rate of survivors with CP if newborns with advanced IVH were less likely to survive).

In order to address the question of whether a lower dose of magnesium exposure might have some protective effect against CP, the authors evaluated 67 surviving children and compared their neurodevelopmental scores to their serum ionized magnesium levels and found no statistically significant relationship.

Scudiero et al. in 2000 followed up MagNET with a case-control study at the Chicago Lying-In Hospital in which they found that exposure to more than 48 grams of magnesium was associated with a 4.72 odds ratio of fetal death. These findings were consistent with MagNET, which indicated that the higher the dose of magnesium, the more likely the adverse event. Note that the typical tocolytic magnesium dose exposure is on average over 50 grams for a 48-hour course. This finding led advocates of magnesium to investigate low-dose magnesium protocols.

Crowther et al. in 2003 published results from the ACTOMgSO4 (Australasian Collaborative Trial of Magnesium Sulphate) study. They randomized low-dose magnesium versus placebo in 500 women and found no significant difference in the rate of cerebral palsy, which was the primary outcome studied.

There was an apparent “trend” towards fewer cases of CP and fewer developmental problems. “Trends” are code for “We didn’t find anything but surely it’s not all a waste of time.” Taking into account these trends, and presuming (which is quite a stretch) that the trends would continue over a larger population, Mittendorf et al. concluded that approximately 392 cases of CP could be prevented each year at the cost of 1900 infant deaths. Both of these numbers are, of course, incredibly speculative and add little to the discussion, but they are the results of using the then-available data.

In December of 2006, Marret et al. published results of the PreMAG trial, in which 573 preterm infants at risk of delivery in the next 24 hours in 18 French hospitals were enrolled between 1997 and 2003 to receive either 4 g of magnesium or saline. They found no statistically significant differences in outcomes between the two groups.

All of the studies available up until this point were reviewed by the Cochrane Database and they concluded:

…antenatal magnesium sulfate therapy as a neuroprotective agent for the preterm fetus is not yet established.

Finally, Rouse et al. in 2008 published results of the BEAM Trial (Beneficial Effects of Antenatal Magnesium), started in 1997 by John Hauth, after the highly flawed Nelson and Grether study was published. The idea was conceived in 1996. Hauth is a very influential advocate for magnesium; he and others wrote a critical response to the David Grimes editorial that boldly condemned magnesium sulfate tocolysis in 2007. Their letter begrudgingly concludes,

We agree that magnesium sulfate may not keep fetuses in utero, but the totality of the available data does not support that it in any way harms them.

I point this out only for consideration of bias on the part of the investigators and authors of the BEAM study. These authors were setting out to prove that magnesium was not the bad agent that people were claiming that it was, that they had not been harming women, and that it served some purpose, even if it ‘may’ not keep fetuses in utero. That sentence alone (and the name of the BEAM study) shows the bias. By the time Grimes wrote that editorial in 2006, we knew unequivocally that magnesium ‘did’ not keep fetuses in utero – no ‘may’ about it; but that admission is sometimes too much for those so cognitively dissonant.

Hauth and colleagues performed a retrospective pilot study on the effect of magnesium on neonatal neurologic outcomes which was published in 1998. The analysis of 799 VLBW infants found no difference in those exposed to magnesium compared to those who were not exposed. Still, the BEAM Trial went forward.

The BEAM Trial randomized 2241 women at risk for preterm delivery (mostly with preterm rupture of membranes) to magnesium versus placebo. The primary outcome to be studied was the incidence of moderate or severe cerebral palsy or death, and no significant difference was found between the two groups. Their conclusion,

Fetal exposure to magnesium sulfate before anticipated early preterm delivery did not reduce the combined risk of moderate or severe cerebral palsy or death…

And here the debate should have ended. The primary outcome was designed to be an aggregate inclusive of death and moderate or severe cerebral palsy because the data available at the time the trial was designed had at least suggested that a mechanism by which the number of survivors having moderate or severe CP might be reduced was through culling out the most vulnerable babies (the Grade III and Grade IV IVH neonates) by giving them a drug with anticoagulant properties that increased the risk of death. When I say ‘culling out,’ I am trying to find a respectful word for ‘killing’ since these are real human babies I’m discussing. Remember, the MagNET trial was ended prematurely because this hypothesis was supported by its unexpected findings. MagNET, ACTOMgSO4, PreMAG, a Cochrane meta-analysis, Hauth’s pilot study, and now BEAM had all failed to show a statistically significant decreased risk of cerebral palsy without, at the same time, causing excessive death.

But hope springs eternal for the true believer.

After failing to demonstrate a significant difference in the primary outcome of death or CP, two secondary analyses were performed: death alone and moderate or severe cerebral palsy alone. In other words, the primary aggregate outcome, which the study was designed and powered to detect, was split into two separate outcomes.

For death alone, there was an excess of 10 fetal deaths in the magnesium group but this did not rise to the level of statistical significance. For moderate or severe cerebral palsy alone, there was an excess of 18 cases of CP in the placebo group and this was statistically significant (reported P value of 0.03). From this secondary analysis, subsequent meta-analyses were performed, and through the magic of faulty frequentist methodologies of statistical analysis, the trials previously discussed along with BEAM were repurposed to show that magnesium in fact was associated with a decreased risk of CP without an increased risk of death. Common sense alone tells us that the primary aggregate outcome of the BEAM Trial should have been statistically significant if this were the case, but in the shady world of meta-analysis, anything can and often does happen (particularly when the authors are agenda-driven).

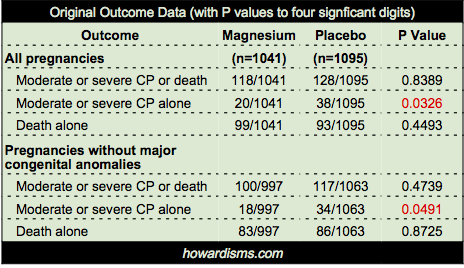

So a few points about BEAM’s conclusion that “the rate of cerebral palsy was reduced among survivors.” Below is the statistical data provided by the authors for the primary outcome (CP or death) and the two subset analyses (CP alone or death alone) for both ‘all pregnancies’ and ‘pregnancies without major congenital anomalies.’ Notice that the entire deck of cards is predicated on just two P values, shown in red:

First, a few points about these P values.

- I cannot stress enough that P values are not the sine qua non of finding meaning in a study. They are heavily abused and overinterpreted. Read more about this here, if you please.

- Even when a P value passes an acceptable threshold (for example, less than 0.05), that means only that a result at least this extreme would still turn up about 5% of the time by chance alone if there were no real effect. The odds that the finding is a false positive can be much higher, so it should be interpreted in the context of all available information.

- P values are only valuable for the primary outcome, due to the dependence of the p value on the power analysis which pre-specifies an appropriate size of enrollment in a study to produce the desired statistical significance. Indeed, over-enrollment in studies is a common problem and P values must be adjusted to reflect this. The authors of the BEAM Trial did this. They intended to enroll 2000 but eventually enrolled 2200. Therefore, they calculated that only “P values less than 0.043 were considered to indicate statistical significance.” They then add, inexplicably, “However, since the adjustment is minimal…” they did not use this adjusted value. What?

- So why does this matter? Note above that the P value for CP reduction in the magnesium group in all pregnancies was 0.0326; but it’s not quite fair to use this value because it is unadjusted for babies with major congenital anomalies. Thus, they also report the P value for reduction of CP in the non-anomalous infant group, which was 0.0491, still less than 0.05 though barely. However, the authors chose to report only one significant digit for the P values, so in the original paper this P value is reported as 0.04. Why? Because a careful reader or reviewer might note that 0.0491 is above the adjusted value of significance of 0.043 (they report both digits here), but by reporting only 0.04 they hide this fact. Unless a reader takes a minute to perform her own Fisher Exact Test, she will not know that 0.04 is not significant. Dishonest? Yes. Is this intentional? I have almost no doubt. P-hacking of some form or another is rampant today. But here’s what the authors’ own data says: the reduction of moderate or severe CP in the magnesium group among non-anomalous infants was not statistically significant. Does this shock you? It gets worse.

- Nominal P values are not to be used in subset analyses without adjustment. In statistics, this is called the Multiple Comparisons Problem. Crudely explained, the more times the experiment is done, the more probable a false positive finding becomes, so the acceptable level of statistical significance must be adjusted down proportionately. One common way of doing this is simply to divide the accepted P value by the number of subset experiments; in this case, it would mean dividing by two. If we divide the nominal P value (0.05) by two, then a new threshold for significance would be P < 0.025; but really we should divide the adjusted P value (0.043) by two, giving us an acceptable P value < 0.0215. Obviously, this procedure makes all of the reported P values in the above data table completely insignificant. If this sounds unorthodox, it’s not; even the authors know that it is an issue and they acknowledge “no adjustments were made for multiple comparisons.” Of course not, because honesty would stand in the way of a significant finding after ten years of work.
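The threshold arithmetic in the points above can be sketched in a few lines of Python. The two P values and the 0.043 threshold are the figures quoted above; the function and variable names are mine, and the divide-by-the-number-of-comparisons rule is the simple correction described in the last point, not anything the authors applied.

```python
NOMINAL_ALPHA = 0.05
ADJUSTED_ALPHA = 0.043   # threshold the authors calculated, as quoted above
N_COMPARISONS = 2        # CP alone and death alone

# P values for CP alone, as quoted above.
reported_p = {
    "CP alone, all pregnancies": 0.0326,
    "CP alone, no major anomalies": 0.0491,
}


def corrected_threshold(alpha, n_comparisons):
    """Divide the significance threshold by the number of comparisons."""
    return alpha / n_comparisons


def verdicts(p_values, threshold):
    """Map each label to True if its P value falls below the threshold."""
    return {label: p < threshold for label, p in p_values.items()}


thresholds = {
    "nominal 0.05": NOMINAL_ALPHA,
    "adjusted 0.043": ADJUSTED_ALPHA,
    "nominal / 2": corrected_threshold(NOMINAL_ALPHA, N_COMPARISONS),
    "adjusted / 2": corrected_threshold(ADJUSTED_ALPHA, N_COMPARISONS),
}

for name, threshold in thresholds.items():
    print(f"{name} (P < {threshold:.4f}): {verdicts(reported_p, threshold)}")
```

Both values pass only at the nominal 0.05 level. At 0.043 the non-anomalous comparison already fails, and once either threshold is halved neither comparison passes.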

But why stop here? The authors also cleverly buried one additional point. There were four sets of twins in the study where one twin died and one twin survived with cerebral palsy. If you or I were designing this study, we would likely decide to exclude these four pregnancies, because in utero death of one twin is a significant risk factor for CP in the surviving twin, as much as a 25% risk. But what’s worse, the pregnancies were unevenly distributed: only one set was in the magnesium group while three were in the placebo group. Twins in general were unevenly distributed between the two groups: 92 in the magnesium group with 111 in the placebo group.

But the authors chose to make the denominator for all relevant calculations the total number of pregnancies, not the total number of fetuses. So the placebo group has a disproportionate number of fetuses compared to the divisor, which the authors didn’t fix because it only really mattered in these four pregnancies (though in fairness, the numerator of healthy babies would have been 19 bigger in the placebo group if they had adjusted it – they did not because this would dilute the beneficial effects they were hoping to find in the magnesium group). Confused? Don’t be; I have done what the authors should have done: excluded just the four mismatched twin pregnancies. Here is the data with those four pregnancies excluded:

That’s right. Not a single significant P value, even if the nominal (and incorrect) value of 0.05 is used.

Now the Bayesian reader of the BEAM Trial is not surprised by any of this, even if she did not take the time to redo the Fisher Exact Tests (and what good Bayesian would?). Why? The claim that there was a reduction of moderate or severe CP doesn’t make sense in the context of reported outcomes. Specifically, the authors report Bayley Scales of Infant Development, with subsets that include Psychomotor Development (at two different thresholds) and Mental Development (again with two different thresholds). None of these four measures were different between the magnesium and placebo groups. In fact, the best P value was 0.83. In other words, even if the reduction in the risk of CP were significant (it isn’t), then a better explanation of the data would have been that the subjective diagnosis of CP was greater in the placebo group, but objective neonatal outcomes did not support this difference.

If all this weren’t enough, here is another fatal flaw: data from a subset analysis should not be used to change clinical practice (in most cases). Rather, it is preliminary data that should be used to inform a sufficiently powered clinical trial to address the suspected finding. Why? Simply because the subset is not appropriately powered to find the differences of importance. For example, let’s say that the finding of a reduction in CP were significant (it wasn’t, but pretend), then it might be possible to say that this finding is statistically valuable, but the same subset analysis may not have been appropriately powered to detect a commensurate rise in the risk of death. In fact, using the authors’ nominal data, that is exactly what has happened. The increase in death in the “All pregnancies” group showed a rate of mortality of 8.5% in the placebo group versus 9.5% in the magnesium group. This full 1 percentage point increase represents 10 babies per 1000, and it is easy to do the math to see how many patients would need to be enrolled to test the statistical significance of this finding. Rarely are subset analyses sufficiently powered to answer such questions, particularly when the outcome is broken out of the larger aggregate outcome (death or CP) that the trial was initially powered to study.
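Here is a rough Python sketch of that math, using the standard normal-approximation formula for comparing two proportions. The choice of a two-sided alpha of 0.05 and 80% power is my assumption for illustration, not a figure from the BEAM protocol, and the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(p1, p2, alpha=0.05, power=0.80):
    """Approximate sample size per arm to detect p1 versus p2.

    Uses the usual normal-approximation formula for two independent
    proportions with a two-sided test.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)            # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


# Mortality rates quoted above: 8.5% (placebo) versus 9.5% (magnesium).
n = n_per_group(0.085, 0.095)
print(f"Per arm: {n}, total: {2 * n}")
```

Under these assumptions the answer comes out at roughly 13,000 infants per arm, several times the 2241 women BEAM randomized.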

Of course, death and CP are not equal in any way. How many survivors with CP is it worth avoiding to kill one baby? Is it 1:1, 5:1, 10:1, or some other number? I have shown explicitly the several reasons why there were no significant findings in the BEAM Trial, but if you are still holding out hope, then you need to consider this question. Ultimately, this is why the subset analysis is not meaningful. The change in CP rates and the change in death rates, the trends at least, are not equal, so a larger study is needed to observe if the change in death is real. Then a value judgment can be made about death versus living with CP. Physicians who are using magnesium for this purpose should be discussing this value with their patients.

In the end, the BEAM Trial is just like the studies that had come before it: it suggests that CP may be reduced because the total number of survivors is reduced, but the findings do not rise to the level of statistical significance. The appropriate outcome to be studied was the combined outcome of death and CP, which again showed no significant difference even by the authors’ own attestation. Another way of looking at the data is to think about the total number of intact survivors in each arm of the study: 88.7% of the infants in the magnesium group were intact, whereas 88.3% of the survivors in the placebo group were intact. And if we take out the 4 sets of twins with death/CP that were distributed unevenly, then those numbers change to 88.75% and 88.56% respectively. Neither set of these numbers is anywhere near statistically significant and obviously not clinically significant.

Some other problems:

- None of these studies have used consistent doses of magnesium. PreMAG gave only 4 grams total while the BEAM Trial used tocolytic dosages (the real intention of the authors after all was to validate their own practices). The bulk of the evidence shows that this larger dose of magnesium exposure is associated with an increased risk of neonatal death, and it is likely that if the trial were better powered, the 1%-point increase in mortality would stay consistent.

- At the time of publication, an accompanying editorial in the NEJM did not recommend the use of magnesium for this indication. ACOG still does not, but it doesn’t say not to either (the same stance it takes in regard to tocolytic usage). Don’t underestimate the politics at play regarding this issue. Thought leaders are deeply divided. Baha Sibai wrote a cogent response to the BEAM study in a 2011 debate in the Gray Journal that was harshly criticized by Rouse with ad hominem attacks. One camp acknowledges that no statistically significant findings have come forth to justify making the routine use of magnesium for this indication a standard of care, while the other camp doesn’t care about evidence-based medicine and the relevant statistics. Dwight Rouse (lead investigator of the BEAM Trial) wrote a response to a letter by Mittendorf that was critical of the BEAM Trial. In the response, Rouse not only digs in regarding the BEAM Trial, but he also claims that the ACTOMgSO4 trial and the PreMAG trial agree with his findings. Of course, neither of those studies concluded that there was a statistically significant difference between magnesium and placebo in regard to neonatal outcomes, so I guess in a way Rouse is telling the truth, but statements like these, parroted in a thousand review articles, are misleading to readers who almost never check the references. Unfortunately, both readers of scientific articles and reviewers and disseminators of scientific articles act like lemmings marching off the cliff.

- Follow-up studies from the BEAM data have failed to show differences in measurable effect based on dose or length of exposure to magnesium and have also failed to show significant radiologic differences in neonates (specifically head ultrasounds). Both of these facts tend to hurt any potential causal arguments relative to magnesium and the prevention of cerebral palsy. Also, studies have shown that magnesium did not affect time to delivery (yes, they were still hoping).

The follow-up meta-analyses that have been published, including the favorable Cochrane review, are distorted due to the statistical games played in the subset analysis of the BEAM Trial. If the subset analysis is restored to its true significance, then the meta-analyses fall apart. As a larger lesson, this shows how important intellectual honesty is in the way data is presented. The BEAM Trial has been cited in over 300 papers since it was published, and it has all but replaced the significance of the previous studies with which it attempts to disagree. This shows the confirmation bias of the many authors who have latched onto the study and who ignore the other data because this paper tells them what they want to hear: Magnesium is good.

Usage of magnesium for premature labor, after a gentle decline in the early 2000s, has now exploded again, and a generation of physicians who could not stand against the march of evidence that destroyed magnesium as an effective tocolytic now feel justified again. Meanwhile, women and children suffer (for example, this large 2015 study found that infants exposed to magnesium had a 1.9-fold higher NICU admission rate after adjusting for other variables).

When we are not disciplined in utilizing evidence-based medicine to inform our decisions, it sometimes takes generations to overcome the mistakes made. Literally hundreds of years were spent bleeding women for eclampsia. Fifty years have been wasted on magnesium as a tocolytic, and many are still using it for this indication today. And now the bait-and-switch of prevention of cerebral palsy. It doesn’t have to be another fifty years before we realize that this too was a false path; we just need to apply rigorous techniques to the data we have, consider the pre- and post-study probabilities of this hypothesis, and adjust those probabilities as new data emerges.
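To make the idea of pre- and post-study probability concrete, here is a small Python sketch of the standard Bayes calculation. The prior probabilities, the 80% power, and the 0.05 alpha are hypothetical placeholders chosen for illustration; none of them is an estimate taken from the magnesium literature.

```python
def post_study_probability_positive(prior, power, alpha):
    """Probability the hypothesis is true after a 'significant' result."""
    true_positive = power * prior
    false_positive = alpha * (1 - prior)
    return true_positive / (true_positive + false_positive)


def post_study_probability_negative(prior, power, alpha):
    """Probability the hypothesis is true after a non-significant result."""
    false_negative = (1 - power) * prior
    true_negative = (1 - alpha) * (1 - prior)
    return false_negative / (false_negative + true_negative)


# Hypothetical pre-study probabilities, for illustration only.
for prior in (0.05, 0.10, 0.25):
    pos = post_study_probability_positive(prior, power=0.80, alpha=0.05)
    neg = post_study_probability_negative(prior, power=0.80, alpha=0.05)
    print(f"prior {prior:.2f}: after positive {pos:.2f}, after negative {neg:.3f}")
```

With a low prior, even a well-powered positive result leaves substantial doubt, and a negative result pushes the probability lower still.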

Prior to the BEAM Trial, the pre-study probability that magnesium had a beneficial effect on neurological outcomes of exposed neonates was very low. BEAM did not change this. New data will emerge. For example, in 2014, long term pediatric data was published regarding the children who had been enrolled in the ACTOMgSO4 trial. I’ll bet you can already guess the conclusion:

Magnesium sulfate given to pregnant women at imminent risk of birth before 30 weeks’ gestation was not associated with neurological, cognitive, behavioral, growth, or functional outcomes in their children at school age…

The authors of the PreMAG trial also performed school age follow-up on children enrolled in their study and found no difference in outcomes. Interestingly, these are the two trials which Rouse cited as support for the BEAM Trial and their statistics have been perverted by subsequent meta-analyses.

Magnesium is great for the treatment of preeclampsia/eclampsia. Let’s stop pretending it’s a panacea.