Published Date : November 27, 2016

Categories : OB/Gyn

I recently came across this quote from an article in The American Journal of Surgery:

Then gynecologists became enthused over this new surgery and departed from their vaginal technique in order to combine their indicated pelvic work with these new operative wrinkles. In consequence, vaginal surgery became neglected and recently one could find busy clinics where not a single vaginal hysterectomy had ever been performed either by the present staff or by their predecessors. … Since the vaginal approach to pelvic disease is associated with less mortality, a small morbidity rate and a much more rapid convalescence, it is high time that present day gynecologists learned to appreciate the value of vaginal hysterectomy, acquire its technique, and extended its use.

I couldn’t have said this better myself. Reflecting back over the last twenty years, we have seen the time-honored and premier approach of vaginal hysterectomy slowly and painfully displaced by “new wrinkles” that have gained gynecologists’ enthusiasm. First, it was laparoscopy. This once novel approach appealed to the gynecologist’s desire to use the latest and greatest technology and toys – even if it came at a cost to women. At first, most laparoscopic hysterectomies were done as supra-cervical cases, as gynecologists slowly crawled up the learning curve. Leaving the cervix behind exposed women to risks, like cervical dysplasia and cancer, future prolapse, continued bleeding, and a possible future need for a trachelectomy. Once the cervix was within more gynecologists’ wheelhouse, a new cost to women arose: a near 10-fold increased risk of vaginal vault prolapse, along with significantly higher rates of bladder injuries, bowel injuries, and ureteric injuries. All the while, laparoscopic hysterectomy still trailed far behind vaginal hysterectomy in terms of cost, length of stay, cosmesis, and patient satisfaction.

Over time, vaginal surgery developed an increasingly-present crutch: laparoscopic-assistance. This tool was usually more a bandaid for declining vaginal skills than a true necessity. Pure vaginal hysterectomies were becoming relegated to surgeons over 50 years of age and to women with significant prolapse.

Soon enough, the next new operative wrinkle was the robot. The robot was supposed to fix many of the problems that the laparoscope had created: e.g., neck strain and back pain for the surgeon – while leading to improved exposure and instrument flexibility, etc. Yet, the only thing that really changed was an increased length of surgery, more and bigger holes on the abdomen, and substantially increased costs. Vaginal surgery, though, continued to fall into neglect and year after year it has become harder and harder to teach the next generation of gynecologists this cornerstone of our speciality due to fewer cases and poorer skills among attending physicians. A recent ACOG survey estimated that not even 1/3 of OB/GYN residents going into fellowship training are able to perform a vaginal hysterectomy independently.

Thus, as the quote above proclaims, today there exists whole clinics where vaginal hysterectomy is a lost art; where it is performed, it is usually woefully under-utilized. But I must confess something to you: the above quotation was written by N. Sproat Heaney in 1940, some 76 years ago! You see, the robot and the laparoscope are not the first inferior tools to come along and threaten the existence of the vaginal hysterectomy. The first threat was the abdominal hysterectomy.

The first modern vaginal hysterectomy was performed by Conrad Langenbeck in 1813 (though vaginal hysterectomies for prolapse date back over 2000 years). It wasn’t until 1843 that Charles Clay did the first abdominal hysterectomy. Vaginal hysterectomy remained preeminent until the 1900s, when Howard Kelly, one of the “Big Four” at Johns Hopkins, decided that huge abdominal incisions were much better and therefore popularized the abdominal approach. Most other gynecologists joined in lock-step. They felt like “real” surgeons with their expansive incisions and two week hospitalizations.

Kelly promised better exposure than the vaginal route, and an opportunity to do other things while there, like appendectomies and the opportunity to work on any other pathology that might be discovered during this exploratory laparotomy. Sound familiar? Kelly and his followers exposed generations of women to unnecessary invasiveness and often unnecessary surgeries for what we now know today were just anatomic deviations. Heaney, in the above quote, gives as an example the surgical correction of Jackson’s membrane and he chides gynecologists who chose to “invade the abdomen for ‘chronic’ appendicitis.”

Well, at least nothing much has changed.

Heaney was courageous for standing up against the fads of his time and putting the health of women first. Might we be ever so valiant.

Published Date : November 27, 2016

Categories : Cognitive Bias, OB/Gyn

Tallinn, Estonia

In ancient times they had no statistics so they had to fall back on lies. – Stephen Leacock

In almost every situation where data can be collected and analyzed, we are faced with comparisons of that data to itself. This is sometimes a useful technique – but not always. For example, school grades can be used to compare one student to another, identifying the top 10%tile of students as well as the bottom 10%tile of students. This might have meaning; it might be that the top 10%tile deserve scholarships and other opportunities, while the bottom 10%tile deserve remediation. We naturally stigmatize the bottom 10%tile and applaud the top 10%tile. But this can be, in certain situations, wholly artificial.

Is the goal of education to stratify students into percentiles? No. The goal is for students to achieve competency in a curriculum. Not all students will achieve competency in a given curriculum, particularly as the subject matter gets more difficult (achieving competency in kindergarten has little correlation with achieving competency in medical school). But, in any event, the goal should be that students achieve some predefined level of competency. That’s not to say that some students won’t achieve that goal easier than others do, nor do I claim that comparing students to one another is unimportant. We certainly do need to identify those advanced students so that they can attempt more advanced competencies. But my point is that when we compare groups of students to one another, that is a different thing from deciding whether a student is competent. In other words, belonging to a certain percentile, in and of itself, is absolutely meaningless.

A class full of 100 high-achieving students may all one day have great academic success and they may all be competent in their chosen endeavors; nevertheless, one of them still constitutes the bottom 1%tile. Similarly, a class full of 100 poor achieving students, none of whom will ever achieve significant success, still has someone in the top 1%tile. Thus, the sample group being compared to itself matters immensely. We know this already from life experiences. Earning a B grade in an advanced physics class at MIT surely has more merit than an A grade earned in an introductory science course in a community college. But this false comparison, forced on us by what I call the Percentile Fallacy, applies to all sorts of descriptive statistics.

Consider, for example, the 50 best countries in the world as ranked in regards to maternal mortality (or any other metric you might be interested in). The difference between 1st and 50th may be substantially insignificant. In fact, considering error in collecting data (poor reporting, differences in labeling, sampling size, etc.) along with differences in populations (e.g., differences in risk factors among the various populations), there may be no real substantial difference between 1st and 50th place that’s not attributable to chance alone (and therefore just noise in the data). But, yet, some country will always come in 1st place and some country will always come in 50th place.

I won’t spend a lot of time dissecting the problems with maternal mortality reporting around the world, but note above that Estonia is ranked 1st in this report while the US is ranked 47th. Does Estonia really have the most advanced maternity care in the world? No.

Estonia averages about 14,000 births per year (compared to about 4 million in the US). The small sample size of countries like Estonia and Iceland tend to make them look very good (often having just 0 or 1 maternal death in a given year). There are single hospitals in the US that deliver more babies per year than Estonia or Iceland (and I personally have delivered more babies per year than Liechtenstein, Monaco, and San Marino). Women with high risk conditions in Europe tend to travel out of these countries for maternity care, so the sample is diluted of complicated patients. Throughout different countries of the world, wide variation exists about what is considered a maternal death and how these deaths are reported and collected. Is any women with a positive pregnancy test who dies considered a maternal death? No, not ideally. In theory, the pregnancy should contribute to the death. So should a woman who dies of pneumonia or a pulmonary embolism four weeks after delivering a baby be considered a maternal death? These types of decisions are not made uniformly.

In the US, various projects aimed at increasing local identification of pregnancy-related deaths have found both significant under-counting of pregnancy-related deaths and also significant over-counting. We simply don’t know the real rates even with complicated tracking and recording systems. So how well can we expect Slovakia, with fewer obstetric resources than the state of Tennessee, to do with tracking its true maternal mortality rate? Yet Slovakia, according to the data above, has almost one-third the rate of maternal mortality of the United States. The truth is we cannot really use this data for a like-kind comparison. We can use data from 2014 Slovakia to compare it to 2013 Slovakia, but not to 2014 Oregon.

But these insignificant differences are parlayed by special interest groups and policy-makers into a crisis that needs rescuing for those poor, bottom-dwelling, low-percentile countries like the United States. These types of comparative statistical errors are rampant in nearly every aspect of science and public policy. As long as we have 50 states, some state will rank first and some state will rank 50th in every imaginable statistical category; and some politician or special interest group will exploit that 50th place ranking as a reason to change policies or laws, spend more money, and raise the public angst. But some state will always be 50th. Some country will always be near the last, and some student will always be near the bottom percentile.

The “problem” is not the country, state, or student, the problem is what I call the Percentile Fallacy. The percentile fallacy occurs whenever one member of a group is thought to be problematic compared to another member of the group not because they have failed to meet some objective standard but because they have to be on a different part of the bell-shaped curve.

Hospitals are guilty of the percentile fallacy every day. Hospitals collect troves of data comparing themselves to other hospitals. They may all be excellent (or they may all be horrible) but some hospitals will always constitute the “top performers” and others will be basement dwellers. What’s worse, reimbursement for services is moving towards the same erroneous formula, where hospitals and doctors who are in the top percentiles of some metric are rewarded with more money while those in the bottom percentiles are penalized by having money taken away. This is a zero sum game (which is why payers like it) and it doesn’t really identify competency (which should be the real goal). The top percentile performers may be woefully incompetent in some category and have no real incentive to improve, so long as they are just a teeny bit less crappy than everyone else. And in the other extreme, virtually every hospital or provider may be doing a good, competent job, but some will still be penalized because there will always be those bottom percentiles. We need thresholds of good performance, not percentiles.

The percentile fallacy is pervasive in life. It does little to promote actual competency and rather promotes learning how to play the data-collection game well. It rewards those who understand the formula, which often has little basis in true competency. In healthcare, most performance metrics are picked because of ease of access to data that can be computed by some central organization. For example, in obstetrics, a widely proposed “quality metric” may be whether all pregnant women are screened for gestational diabetes during their pregnancies. This test is chosen not because it is important but because it assumed by policy makers that it should be occurring universally and because the data is available (since a charge is submitted by the physician for this service).

Now the competent, evidence-based approach to this issue of screening is to screen first with history and then to perform a chemical screen on high and average risk women (not low risk women). But because this artificial measure of competency is erected, all physicians will start doing the sometimes unnecessary (and sometimes harmful) chemical screens even on low risk women. The bottom-dwelling outliers are not likely to be generally incompetent obstetricians but, rather, ethical, evidence-based providers who couldn’t bring themselves to harm their patients by doing the wrong thing.

Eventually, in such an incentivized system, physicians will all play the game and make sure every patient gets screened (no doubt some will submit charges even if the test didn’t actually get done so that they don’t get financially harmed). They will all learn to play the game. This could be used for good if physicians were incentivized to do something truly essential, but there are few examples of this type of incentivization. So what happens when the top performing physicians all screen 100% of their patients and the bottom performing physicians all screen 99.5% of their patients? Well, there will still be a top quartile and a bottom quartile, and someone will get bonused for no good reason and someone will get penalized for no good reason. This is the percentile fallacy in action.

Put another way, the percentile fallacy leads to harm whenever it is inappropriate to make such comparisons. We shouldn’t be interested in such false comparisons; rather, we should be interested in certain levels of performance or competency.

Here’s another example: Let’s say we instituted a quality measure than stipulated that any obstetrician with a total cesarean delivery rate less than 25% will be financially rewarded. This reward should be available to every single doctor, if they can achieve the standard. This is a competency based approach. The alternative is to use percentiles. If we used the percentile instead, physicians will all aim for the better percentile and work to lower their cesarean delivery rates; this could be good in the short term. But eventually, the best performing docs, having been overtaken or matched by everyone else, will strive for even lower cesarean rates. This would be acceptable incentivization if a cesarean delivery rate of 0% were desirable – but it isn’t, and we don’t know what rate is too low. Eventually, patients will be harmed by physicians not performing appropriate cesareans so as to maintain their prime reimbursement status. In this way, and in thousands of comparable examples, the percentile fallacy is harmful.

I started with an education example, and this is an important misuse of the fallacy. Our goal in education has to be competency. Students should not receive credit for a course or a degree if they cannot perform certain skills and possess certain knowledge and attitudes. We encourage students to game the system with our current system of standardized tests, a pump-and-dump attitude towards knowledge, and little emphasis on critical thinking skills. Thus, the majority of people who have graduated with degrees are incompetent. Don’t believe me? You probably made an A in calculus in college (if you are reading this); care to evaluate some indefinite integrals? I didn’t think so. Of course, that probably wasn’t the goal of your calculus course; but if it wasn’t, then what was? We must clearly define expected goals and competencies, or nothing has meaning. What are the competencies of a good physician? How should we define those (and demand them)? Our system of merits must enforce these competencies, but our current system rewards unethical behavior and patient harm.

Published Date : November 27, 2016

Categories : OB/Gyn

Here are some sample operative notes for obstetrics and gynecology. Feel free to download and modify these for your use. We will add others from time to time, and make corrections or modifications as needed. So far there are examples for:

Published Date : November 22, 2016

Categories : Evidence Based Medicine, OB/Gyn

One of the great limitations of physical exam is that many (if not most providers) don’t do it very well. I have previously recommended the Stanford 25 as an excellent resource to enhance physical exam skills. In the case of clinical breast exam (CBE), the literature has been increasingly unkind to this stalwart of gynecologic practice. The American Cancer Society questions the value of CBE alone to detect worrisome lesions, though it may serve a role in reducing the number of false positives associated with mammography. In 2015, they stopped recommending that CBE be performed at all, which is in line with recommendations from the US Preventative Task Force Service.

Thus, clinical breast exam may be going the way of self-breast exam (SBE). SBE hasn’t been widely recommend in nearly 20 years; it has never been shown to decrease mortality from breast cancer, but it has been associated with an increased number of patient complaints stemming from benign issues – and an increased number of biopsies and interventions to go along with these complaints. From this perspective, SBE (in low risk women) is a net harm: increased interventions and risks with no betterment in outcomes.

Obviously, a major problem with SBE is that it is performed by incompetent examiners. Women may know their own breasts very well, but they are usually ill-prepared for performing the proper techniques and understanding how their breasts change with time of life, pregnancy, weight changes, hormonal status, etc. It is hardly surprising that SBE is net harmful. If we gave people stethoscopes and told them to listen to their own hearts for murmurs, we wouldn’t be surprised if this were also an ineffective strategy. But should cardiologists not listen to hearts?

I am suggesting that CBE may have shown poor utility in clinical studies primary because most providers are not doing the exam correctly. We don’t have studies that control for the methods used for CBE and the effect of appropriately-performed CBE on mortality; we do have evidence that CBE reduces false positive discoveries when used in conjunction with mammography or MRI and we have evidence that CBE may improve patient compliance with mammography. We have evidence that appropriate technique for CBE can greatly increase the sensitivity for smaller lesions. We also know that CBE is useful for symptomatic patients and perhaps for high-risk patients. In order to maximize these benefits, we must be as good as we can be as CBE. Perhaps with more widespread use of good technique, CBE might show promise as a primary screening tool again in average risk women, particularly in the coming era of fewer screening mammograms and later onset of mammography (age 50 and up).

Remember, the goal of mammography is to detect lesions before they are palpable, while the goal of CBE is to detect early, palpable lesions. So studies which compare the efficacy of CBE to mammography will never show that CBE is of value, though it may produce fewer false positives (less over-diagnosis). However, if mammography becomes routine starting at age 50 (as opposed to age 40), then CBE (done well) may show value in detecting small lesions that are not otherwise detected since mammography won’t be routinely used in these populations. CBE is 58.8% sensitive and 93.4% specific for detecting breast cancer; this may not be good compared to mammography but it is excellence compared to no screening at all. While it is true that no current data shows a mortality benefit for CBE, it is also true that no current study shows a mortality benefit for mammography.

Let’s look at four essential skills for a quality clinical breast examination.

1. Cover all of the tissue systematically (use vertical stripes).

The breast is a comma shaped organ with its tail extending upwards into the axilla. All of the breast tissue needs to be systematically palpated. Popular methods for this include circles of increasing size, starting from the nipple and moving outward; wedges radiating to or from the nipple in different radial directions, and vertical stripes (or horizontal stripes, the ‘lawn mower method’). Multiple studies have shown that the vertical stripes method is at least 50% more sensitive than other methods and leads to more consistent coverage of breast tissue. The data is very clear that this the only acceptable method for SBE and CBE.

2. Use the pads of three fingers, one hand at at time.

The most sensitive parts of the fingers are the pads of the digits, not the tips. As in the drawing above, the pads of three fingers should be used for palpation. Many providers will use the tips of the fingers, pushing down in alternating motions (as if typing or playing the piano); this method is essentially worthless to detect small tumors (less than 1 cm). Additionally, using only one hand at time enhances your brain’s ability to process all of this sensory information accurately. While bimanual haptic perception (using both hands) is beneficial for identifying large objects (cylinders, curved objects, horses, etc.), this is not the case for fine touch. There is integration of sensory information from digits which are from the same somatosensory map (for example, the middle three fingers) but not from different maps (for example, the thumb and little finger or fingers from two different hands) for fine touch. So use the pads of the middle three fingers and only use one hand at a time (of course you may need the other hand for positioning of a large breast, but not for simultaneous palpation).

3. Make overlapping circular motions.

The best way to feel a nodule underlying superficial layers of skin and fat is to make circular motions (circumscribing a radius about the size of a dime) with your fingers. This will make skin and fat layers roll over the lump and the lump will stand out. Simple downward compression is far less effective at detecting an underlying mass. Multiples studies have shown that small circular motions greatly enhance palpation technique for breast exam compared to any other method.

4. Use three levels of pressure (compression).

Because tumors may be relatively superficial just under the skin, very deep next to the chest wall, or anywhere in between, then compression of the breast tissue in three specific levels is appropriate for most breasts. A deep tumor may not “roll” under the fingers with light pressure, and a superficial tumor may not “roll” under the fingers with too firm (deep) pressure. Combined with a circular motion, these three levels of palpation can be executed in one successive movement: after placing the three fingers, one rotation with light pressure, then intermediate pressure, then full pressure. Simply move your hand over with about a finger’s breadth of overlap and repeat.

Bonus tips.

Don’t routinely attempt to express fluid from the nipples. This painful maneuver has no clinical utility in asymptomatic women and produces a large number of false positive (most women who have had children can be made to discharge fluid).

Don’t teach your patients to perform self-breast exams or to practice self-breast awareness, unless they are in a high-risk group for breast cancer. These practices have been long abandoned and have been shown to be harmful. If the patient is in a high-risk group, then the four elements described here are essential to educating your patient.

Don’t forget about palpation of axillary and clavicular lymph nodes and inspection for nipple symmetry, etc. These elements of the breast exam are most important for women in whom you suspect a mass or who are otherwise high-risk.

Don’t worry about timing. Studies are mixed about how long it should take to perform an exam, and it clearly will vary considerably based upon breast size and the complexity of what is being palpated. Still, if a whole breast exam takes 20-3o seconds, you probably are not using these techniques.

Bottom line.

These principles are the core of the MammaCare breast examination method and have been shown to greatly enhance the ability of CBE to detect small lumps compared to other methods. A large body of clinical research exists showing the superiority of these combined methods. Only a small percentage of providers utilize these four essential elements, and therefore the efficacy of CBE is greatly diluted. CBE should become more important in the years to come as women more often do not receive mammography until age 40, and hopefully future studies will focus on the utility of CBE with appropriately employed techniques.

Published Date : November 21, 2016

Categories : #FourTips, OB/Gyn

Here’s a new video with four tips to hopefully make vaginal hysterectomy a bit easier. If you haven’t yet, take a look at this post on vaginal hysterectomy.

Published Date : November 15, 2016

Categories : Cognitive Bias

A lot of folks on both ends of the political spectrum were shocked at the results of the recent national election. Virtually every poll and pundit had not only predicted a fairly easy victory for Hillary Clinton but also had predicted that the Democrats would retake the Senate. Neither happened and, in retrospect, neither outcome was even really that close. A lot of analysis has followed about what went wrong in the prediction business. Were the polls that wrong? Are the formulae that are used to predict outcomes that skewed? This has not been the case in the last two presidential elections. The fact that polls have been so accurate in recent elections made the results of the election, in most people’s eyes, all the more unbelievable. Did people lie to the pollsters? Were folks afraid to say that they were planning to vote for Trump? Did they lie to the exit pollers about their votes? Were the pollsters in the bag for Hillary so much so that they made up data or manipulated the system?

The results of the election were counterintuitive to those leaning left and so the fact that it happened is hard to reconcile. The results were also surprising to many of those leaning right, particularly those who had too much faith in the normally reliable polls. What can we learn from this error of polling science and how does it apply to medical science?

As I said above, virtually every pollster was wrong, and those who have too much faith in the science behind polling are the ones who were most shocked about the outcome of the election. As a general rule, an increasing number of people have too much faith in science in general (we call these folks Scientismists). Science has become the new religion for many people – mostly college educated adults, and even more so those with advanced degrees. As with many religions, Scientism comes with false dogma, smugness, self-righteousness, and intolerance. Anyone who disagrees with whatever such a person might consider to be science is dismissed as a close-minded bigot. Facts are facts, they will claim, and simply invoking the word ‘science’ should be enough to end any argument.

But this way of thinking is absurdly immature. Those who believe this way have made the dangerous misstep of separating science from philosophy (see below). You cannot understand what a fact even is (or knowledge in general) without understanding epistemology and ontology which are, and forever will be, fields of philosophy. An interpretation of science in the rigid, dogmatic sense that most scientism bigots espouse is incompatible with any reasonable understanding of epistemology and is shockingly dangerous.

History is full of scientific theories that were once widely believed and evidenced but which today have been replaced by new theories based on better data. Talk of geocentrism, for example, just sounds silly today, along with the four bodily humors in medicine or, perhaps, the hollow earth theory. But not too long ago, these ideas were believed with so much certainty that anyone who might dare to disagree with them did so at his own peril.

Now I am not making the science was wrong argument, which is too often used to support non-sensical ideas like the flat earth or other such woo. True science doesn’t make dogmatic and overly-certain assertions. Scientific ‘knowledge’ is usually transitory and true scientists should constantly be seeking to disprove what they believe. Science is not wrong, but, rather, people have been wrong and used the name of science to justify their false beliefs (and in many cases persecute others). Invoking science as an absolute authority is wrong (and dangerous). I would remind you that physicians who believed in the four bodily humors and/or denied the germ theory of disease in the 19th century felt just as certain about their beliefs as you might about, say, genetics. They were making their best guess based on available data, and so do we today; but we should be open to other theories and new data. Don’t be so dogmatic and cocksure.

So the first lesson: If you truly understood science, then you would believe in it far less than you likely do. Facts are usually not facts (they are mostly opinions). It takes a keen sense of philosophy to keep science in its place. But be leery of those who start sentences with words like, “Science has proven…” or “Studies show…”

How does this apply to the polls? Polls are scientific (as much as anything is) but that doesn’t make them free of bias or other errors. The wide variation in polls and the vastly different interpretation of polls is evidence enough that the science of polling is far from exact. More than random error accounts for these differences; there are fundamental disagreements about how to conduct and analyze polls and subjective assumptions about the electorate that vary from one pollster to the next.

A voter poll is not a sample of 1000 average people that reports voter preference for a candidate (say 520 votes for Candidate A versus 480 for Candidate B). We don’t know what the average person is nor can we quantify the average voter. So a pollster collects information about the characteristics of the person being polled (gender, ethnicity, voting history, party affiliation, etc.) and then asks who that person is likely to vote for in the election. The poll might actually record that 700 people plan to vote for Candidate B while 300 plan to vote for Candidate A, but this raw data is normalized according to the categories that the pollster assumes represent the group of likely voters. This raw data is transmuted (and it should be) so that the reported result might be 480 for Candidate A and 520 for Candidate B. But this important process of correcting for over-sampling and under-sampling of various demographics is a key area where bias can have an effect. Assumptions must be made and the pollster’s biases invariably affect these assumptions.

Such is the case for most scientific studies. If you already assume that caffeine causes birth defects, you are more likely to interpret data in a way that comes to this conclusion than if you did not already assume it. Think about this: dozens of very intelligent pollsters and analysts all worked with data that was replicated and resampled over and over again and all came to the wrong conclusion. They had a massive amount of data (compared to the average medical study) and resampled (repeated the experiment) hundreds of times; yet, they were wrong. How good do you really think a single medical study with 200 patients is going to be?

Have you ever wondered why it is that someone who does advanced work in mathematics has the same degree as someone who does advanced work in, say, art? They both have Doctorates of Philosophy because they both study subjective fields of human inquiry. Science is philosophy. We all look at things in different ways and our biases skew our sense of objective reality (they don’t skew reality, just our understanding of it). This is true even in fields that seem highly objective, like mathematics. A great example of this is in the field of probability. It really does matter whether or not you are a Bayesian or a Frequentist. Regardless of which philosophy you prefer (did you not know that you had a philosophical leaning?), you have to make certain assumptions about data in order to apply your equations, and here is where our biases rush in to help us make assumptions (not only am I a Bayesian, but I prefer the Jeffreys-Jaynes variety of Bayesianism). How you conduct science depends on your philosophical beliefs about the scientific process; how you analyze and categorize evidence and facts depends on your views of epistemology and ontology.

If two people can look at the same data and draw different conclusions, then you know that bias is present and not being mitigated adequately. We all have bias; but some are more aware of it than others. Only by being aware of it can we begin to mitigate it. We cannot (and shouldn’t try) to get rid of bias. Those who think that they are unbiased are usually the most dangerous folks; they are just unaware of their biases (or don’t care about them) and, in turn, are doing nothing to mitigate them. This is the danger of Scientism. The more you believe that you are right because ‘science’ or ‘facts’ say so, the less you truly know about science and facts and the less you are mitigating your personal biases. Bias is not just about your predisposition to dislike people who are different than you, and perhaps we should use the phrase cognitive disposition to respond as a less derisive term, but bias is shorter and easier to understand. If the word makes you uncomfortable, then good. Bias is easy to understand in the context of journalism and pollsters; most political junkies are aware that over 90% of journalists are left-leaning. Bias is harder for people to understand in the context of medical science, but the impact on outcomes (poll results, study findings, the patient’s diagnosis) is the same.

Thus, while the pollsters and pundits used science to inform their opinions of the data, most were unaware of how they were cognitively affected by their philosophies. In some cases, this means that they used statistical methods that were inappropriate, and in other cases it means that their political philosophy (and their assumptions about how people think and make decisions) misinformed their data interpretation. This happens in every scientific study. The wind is always going to blow, and your sail is your bias. You can’t get rid of your sail, you just have to point it in the best way possible. The boat is going to move regardless (even if you take the sail down). William James, in The Will to Believe, said,

We all, scientists and non-scientists, live on some inclined plane of credulity. The plain tips one way in one man, another way in another; and may he whose plane tips in no way be the first to cast a stone!

The plural of anecdote is not data. ― Marc Bekoff

How we interpret (and report) data affects how we and others synthesize and use it. Does the headline, “College educated whites went for Hillary” fairly represent the fact that Hillary got 51% of the college educated white vote? Maybe a better headline would have been, “Clinton and Trump split the white, college-educated vote.” Even worse, the margin of error for this exit-polling “fact” is enough that the truth may be that the majority of college-educated whites actually preferred Trump in the election. But the rhetorical implications of the various ways of reporting this data are enormous. Reporters and pundits make conscious choices about how to write such headlines.

Would you be surprised to know that Trump got a smaller percentage of the white vote than did Romney and a higher percentage of the Hispanic and African American vote than did Romney? But since fewer whites and non-whites voted in the 2016 election than in the 2012 election, we could choose to report that data by saying, “Trump received fewer black and Latino votes than Romney.” But so did Hillary compared to Obama. Fewer people voted in total. Obviously the point I am making is that how we frame (contextualize) data is immensely important and what context we choose to present for our data is subject to enormous bias.

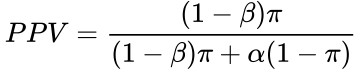

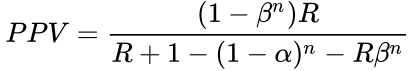

I have made these points before in Words Matter and Trends… as these issues apply to medicine. Data without context is naked, and we must be painfully aware of how we clothe our data. Which facts we choose to emphasize (and which we tend to ignore) are incredibly important. How facts are spun as positives (or negatives) are ways that we bias others to believe our potentially incorrect beliefs. Science is just as guilty of this faux pas as are politicians and preachers. Have you ever said that a study proves or disproves something, or that a test rules something in or out? If you did, you are guilty of this same mistake. Studies and tests don’t prove things or disprove them, they simply change our level of certainty about a hypothesis. In some cases, when the data is widely variable and inconsistent, or when the test has poor utility (low sensitivity or specificity), a test or study may not change our pre-test probability at all. This was the case with polling data from the 2016 election: our pre-test assumption that a Clinton or Trump victory was equally likely should not have been changed at all given the poor quality of the data that was collected. This is also true of most scientific papers (for more on this concept, please read How Do I Know If A Study Is Valid?).

I want to pause here and talk about this notion of consensus, and the rise of what has been called consensus science. I regard consensus science as an extremely pernicious development that ought to be stopped cold in its tracks. Historically, the claim of consensus has been the first refuge of scoundrels; it is a way to avoid debate by claiming that the matter is already settled. Whenever you hear the consensus of scientists agrees on something or other, reach for your wallet, because you’re being had. Let’s be clear: the work of science has nothing whatever to do with consensus. Consensus is the business of politics. Science, on the contrary, requires only one investigator who happens to be right, which means that he or she has results that are verifiable by reference to the real world. In science consensus is irrelevant. What is relevant is reproducible results. The greatest scientists in history are great precisely because they broke with the consensus. There is no such thing as consensus science. If it’s consensus, it isn’t science. If it’s science, it isn’t consensus. Period. ― Michael Crichton

I cannot say this better than did Crichton so I won’t try. The consensus of the polls and pundits was wrong. Similarly, scientific consensus has been wrong about thousands of other scientific ideas in our past. Consensus is the calling card of Scientism. Galileo said, “In questions of science, the authority of a thousand is not worth the humble reasoning of a single individual.” Often what we call scientific consensus would be more accurately termed “prevailing bias.” This type of prevailing bias is dangerous when it leads to close-mindedness and bullying.

Scientific questions like, “Why were the polls so wrong?” or, “What causes heart disease?” are complex and multifactorial. We don’t know all the issues that need to be considered to even begin to answer questions like these. Humans naturally tend to reduce such incredibly complex and convoluted problems down into over-simplified causes so that we can wrap our minds around them. Here’s one such trope from the election: “Whites voted for Trump and non-whites voted for Clinton.” This vast oversimplification of why Trump won and Clinton lost is frustratingly misleading, but if you are predisposed to want to believe that this is the answer, then those words in The Guardian will suffice. Why not report, “People on the coast voted for Clinton and inland folks voted for Trump,” or “People in big cities voted for Clinton and people in smaller cities and rural areas voted for Trump,” or old people versus young people, rich versus poor, bankers versus truck drivers, or violists versus engineers?

It would be wrong to reduce a complex question down to any of these over-simplified explanations, but when you see it done, you can tell the bias of the author. If someone studies dietary factors associated with Alzheimer’s disease, the choice of which factors they chose to study reveals his bias. What’s more, even though all of those issues may be relevant to the problem, when the evidence is presented in such a reductionist and absolute way, we quickly lose perspective of how important the issue truly is. So if only 51% of college-educated whites voted for Clinton, why present that particular fact (out of context and without a measure of the magnitude of effect)? The truth would appear to be that a college education was not really relevant to the election outcome. How much does eating hot dogs increase my chance of GI cancer? I’m not doubting that it does, but if the magnitude of effect is as little as it is, I will continue eating hot dogs and not worry about it.

We are curious and we all want to know the mechanisms of the diseases we treat or why people vote the way they do, etc. Yet, we must be careful not to too quickly fill in details that we don’t really understand. Not everything has to be understood to be known. When we reduce down complex problems, we almost always create misconceptions, and these misconceptions may impede progress and understanding of what is actually a complex issue. One of the my favorite medical examples: meconium aspiration syndrome (MAS). There is a comfort for the physician in thinking of MAS as a disease caused by the aspiration of meconium during birth; but the reality is that the meconium likely has little to nothing to do with the often fatal variety of MAS. We oversimplified a complex problem, but the false idea has been incredibly persistent. We spent decades doing non-evidence based interventions like amnioinfusion or deep suctioning at the perineum because it made sense. It made sense because we reduced a complex problem down to simple elements (that were, as it turns out, wrong or at least incomplete). Other examples include the cholesterol hypothesis of heart disease or the mechanical theories of cervical insufficiency, etc.

The quest for absolute certainty is an immature, if not infantile, trait of thinking. ― Herbert Feigl

The truth is that the pollsters’ main sin was over-representing their levels of confidence. Different pollsters looks at the same data in remarkably different ways. Out of 61 tracking polls going into the election, only one (the L.A. Times/USC poll) gave Trump a lead. Those individual polls, it turns out, over-sampled minority votes (or under-sampled white voters, depending upon your bias). Why did this incorrect sampling happen? Bias. Pollsters were over-sold on the narrative of the changing demographics of America. Some have admitted that they felt it was impossible for any Republican to win ever again because of this. So they made sampling assumptions in the polls that were biased to produce that result (I am not saying this was done consciously – they honestly believed that what they were doing was correct).

Here’s the lesson: We make serious mistakes in science and in decision making when we pick the outcome before we collect (or analyze) the data. We must let the facts lead to a conclusion, not our conclusion lead to the facts. This principle is true for every scientific experiment ever conducted. The pollsters really didn’t know what percentage of whites and non-whites would vote in this election (this was much easier in the 2012 election which was rhetorically similar to the 2008 election). But rather than express this uncertainty in reporting the data, the pollsters published confidence intervals and margins of error that were too narrow than the data deserved (and this over-selling of confidence is promoted by a sense of competition with other pollsters, just like scientific investigators in every field seek to one-up their colleagues).

This already biased polling data was then used by outfits like fivethirtyeight.com to run through all the scenarios and make a projection of the likely outcome of the race. A group at Princeton reported a 99% chance that Clinton would win. Fivethirtyeight gave Clinton the lowest pre-election probability of winning at 71.4%. In other words, the algorithms used by fivethirtyeight and Princeton and others looked at basically the same data and, by making different philosophical assumptions, used the same probability science to estimate the chance of the two candidates winning and came up with wildly different predictions from one another (from a low of 71.4% to a high of a 99% chance of a Clinton victory). These models of reality were all vastly different than actual reality (a 0% chance of a Clinton victory).

But if we look closer at both how much the polls changed throughout the season and how much prognosticators changed their predictions throughout the season, then what we note is very wide variation. This amount of variation alone can be statistically tied to the level of uncertainty in the data, which is a product of both the pollsters’ misassumptions about sampling and the pundits’ overconfidence in that data (and some poor statistical methodology). Error begets error. Nassim Taleb, author of Black Swan, Antifragile, and Fooled By Randomness, consistently criticized the statistical methodologies of fivethirtyeight and other outlets throughout the last year. If you are interested in some statistical heavy-lifting, read his excellent analysis here.

What the pundits should have said was, “I don’t know who has the advantage” and that would have been closer to the truth (this was Taleb’s own position). In other words, when we communicate scientific knowledge, it is important to report both the magnitude of effect of the observation and the probability that the “fact” is true (our confidence level). This underscores again the importance of epistemology (and I would argue Bayesian probabilistic reasoning). One of the basic tenets of Bayesian inference is that when no good data is available to estimate the probability of an event, then we just assume that the event is as likely to occur as to not occur (50/50 chance). This intuitive estimate, as it turns out, was much closer to the election outcome than any pundit’s prediction. But we didn’t know what we didn’t know because people are bad at saying the words, “I don’t know.” A side note is that very often our intuition is actually better than shoddy attempts at explaining things “scientifically.” This theme is consistent in Taleb’s writings. For example, most people unexposed to polling results (which tend to bias their opinions) would have stated that they were not sure who would win. That was a better answer than was Nate Silver’s at the fivethirtyeight blog. Intuition (System 1 thinking) is a powerful tool when it is fed with good data and is mitigated for bias (but, yes, that’s the hard part).

Nassim Taleb is less subtle in his criticisms of Scientism and what he calls the IYI class (intellectual yet idiot):

With psychology papers replicating less than 40%, dietary advice reversing after 30 years of fatphobia, macroeconomic analysis working worse than astrology, the appointment of Bernanke who was less than clueless of the risks, and pharmaceutical trials replicating at best only 1/3 of the time, people are perfectly entitled to rely on their own ancestral instinct and listen to their grandmothers (or Montaigne and such filtered classical knowledge) with a better track record than these policymaking goons.

Indeed one can see that these academico-bureaucrats who feel entitled to run our lives aren’t even rigorous, whether in medical statistics or policymaking. They can’t tell science from scientism — in fact in their eyes scientism looks more scientific than real science.

Taleb has written at length about how poor statistical science affects policy-making (mostly in the economic realm). But we see this in medical science as well. Do sequential compression devices used at the time of cesarean delivery reduce the risk of pulmonary embolism? No. But they are almost universally used in hospitals across America and have become “policy.” Such policies are hard to reverse. In medicine, we create de facto standards of care based on low-confidence, poor evidence. Scientific studies should be required to estimate the level of confidence that the hypothesis under consideration is true (and this is completely different than what a P-value tells us), as well as the magnitude of the effect observed or level of importance. Just because something is likely to be true doesn’t mean it’s important.

Consequentialism is a moral hindrance to knowledge.

Science has long been hindered by dogma. Most great innovations come from those who have been unencumbered by the weight of preconceptions. By age 24, Newton had conceptualized gravity and discovered the calculus. Semmelweis had discovered that hand-washing could prevent puerperal sepsis by age 29. Faraday had invented the electric motor by age 24. Thomas Jefferson wrote the Declaration of Independence at age 33. Why so young? Because these men and dozens of others had not already decided the limitations of their knowledge. They did not suffer from the ‘persistence of ideas’ bias. They did not know what was possible (or impossible). They looked at the world differently than their older contemporaries because they had not already learned ‘the way it was.’ Therefore, they had less motivation to skew the data they observed into a prespecified direction or to make the facts fit a narrative. Again, they were more likely to abide by the howardism, We must let the facts lead to a conclusion, not our conclusion lead to the facts.

Much of pesudo-science starts with someone stating, “I know I’m right, now I just have to prove it!” This opens the door to often inextricable bias. I believe this is a form of consequentialism.

Consequentialism is a moral philosophy best explained with the aphorism, “The ends justifies the means.” In various contexts, the idea is that the desired end (which is presumably good) justifies whatever actions are needed to achieve that end along the way. Actions that might normally be considered unethical (at least from a deontological perspective) are justifiable because the end-goal is worth it. Of course, this presumes two things: that the end really is worth it, and that the impact of all the otherwise unethical actions along the way don’t outweigh the supposed good of the outcome. Such pseudo-Machiavellian philosophy is really just an excuse to justify human depravity (Machiavelli never said the ends justifies the means). Put another way, if you can’t get to a good outcome by using moral actions, the outcome is probably not actually good.

What does this have to do with the election and science? Simply this: when a scientist (or pollster, politician, journalist, or you) has predetermined the outcome or the conclusion you believe should be, then all the data along the way is skewed according to this bias. You are subject to overwhelming cognitive bias. You have fallen prey to Jaynes’ mind projection fallacy. Real science lets the data speak for itself. But most science, as practiced, conforms data to fit a predetermined narrative (the narrative fallacy). However, what we think is the right outcome or conclusion, determined before the data is collected, may or may not be correct. True open-mindedness is not having a preconception about what should be, what is possible or impossible, what is or has been or will be, but only a desire to discover truth and knowledge, regardless of where it might lead.

Just as pundits and pollsters were blind to the reality of a looming Trump victory (and practically inconsolable at the surprise that they had been so wrong), so too do medical scientists conduct studies which are designed with enough implicit bias to tweak the data to fit their agendas. Scientists should seek to disprove their hypothesis, not prove it. There is a reason why the triple blinded, placebo-controlled, randomized trial is the gold standard (though not infallible) – to remove bias. The idea that we should seek to disprove what we believe is not just an axiom – it’s foundational to properly conduct experiments. Be on the lookout for people who pick winners first (people or ideas) and then manipulate the game (rules or data) to make it happen.

Published Date : September 14, 2016

Categories : Evidence Based Medicine

The financial consequences of poor prescribing habits, as detailed in Which Drug Should I Prescribe?, are simply enormous. Recall that just one physician, making poor prescribing decisions for only 500 patients, could unnecessarily add nearly a quarter of a billion dollars in cost to the healthcare system in just 10 years. In the case of prescribing habits, physicians personally gain from their poor choices only by receiving adoration from drug reps along with free lunches and dinners. But what about other treatment decisions? How much cost is added to the system when physicians deviate from evidence-based practice guidelines, and is there a financial benefit to them for doing so?

Let’s look at a practical example.

Imagine a small Ob/Gyn practice with four providers. The four providers see 1,000 young women a year between the ages of 17-20 who desire birth control (that is, each physician sees 250 of these patients). The evidence-based approach to treating these patients would be to give them a long-acting reversible contraceptive (LARC) and to not do a pap smear (of course, they should still have appropriate screenings for mental health, substance abuse, etc. as well as testing for gonorrhea and chlamydia and other interventions as needed).

If each of these women presented initially at age 17 or 18 desiring birth control and received a three-year birth control device (e.g, Nexplanon or Skyla), then it is likely that a majority of them would not return for yearly visits until the three year life of the birth control had passed. It is also likely that only 1-3 of the 1000 women would become pregnant over the three year period while using a LARC. Gynecologists for decades have tied the pap smear to the yearly female visit. As a consequence, most young women, when they learn that they don’t need a pap every year, don’t come back for a visit unless they need birth control or have some problem.

The most common need, of course, is birth control. But when women have a LARC and know that they don’t need a yearly pap smear, they are even less likely to return just for a screening visit. Admittedly, gynecologists need to do a better job of “adding value” to the yearly visit and focus on things that truly improve the quality of life for patients – like mental health and substance abuse counseling – but these things take time and it’s much quicker just do an unnecessary physical exam and move on. Physicians use the need for a birth control prescription – and the lie that they need a pap smear in order to get a prescription for birth control – in order to force women back to the office each year. What’s worse, many young women don’t go to a gynecologist in the first place for birth control because they do dread having a pelvic exam; they then become unintentionally pregnant after relying on condoms or coitus interruptus.

In the following example, the evidence-based (EB) physician sees an 18-year-old patient who desires birth control; he charges her for a birth control counseling visit and charges her for the insertion of a Nexplanon, which will last three years and provides extraordinary efficacy against pregnancy. He sees her each year afterwards for screening for STDs and other age-appropriate counseling, but he does not do a pap smear until she turns 21.

The non-EB physician sees the same 18-year-old patient who desires birth control and prescribes a brand-name birth control pill (at an average cost of $120 per month and with an 8.4% failure rate). He insists that she first have a pap smear since she is sexually active and he plans to continue doing pap smears each year when she returns for a birth control refill. He tells her scary stories about a patient he remembers her age who was having sex and got cervical cancer but thankfully he caught it with a pap smear (of course, that patient didn’t have cervical caner – she had dysplasia – but these little white lies are common in medicine). He doesn’t adequately screen her for mood disorders, substance abuse, or other issues relevant to her age group, but he does make a lot of money off of her; and he could care less about the psychological harm he might have caused her with his scary stories.

About 30% of women in this age group will have an abnormal pap smear if tested, and about 13.5% would have a pap smear showing LSIL, HSIL, or ASCUS with positive high risk HPV. The non-EBM physician would then do colposcopies on these 13.5% of women, along with short interval repeat pap smears (e.g, every 4-6 months) on the women with abnormal pap smears. About 15% of the women who receive colposcopy will have a biopsy with a moderate or severe dysplastic lesion, and the non-EBM physician will then do an unnecessary and potentially sterilizing LEEP procedure on those patients.

Since he gave these women birth control pills, 84 of the 1,000 patients will become pregnant each year, and this will lead to significant costs for caring for these pregnant women and delivering these babies. In fact, 252 of the 1000 women will become pregnant over the next three years (compared to 1-3 women who received a LARC). Ironically, the very reason why the patients came to the doctor in the first place is the most neglected, with nearly 1 in 4 becoming pregnant because of the poor decisions of the non-EB physician. The chart below shows the financial repercussions for the first year of care for these patients and for three years of care (since that is the useful life of a Nexplanon or Skyla LARC):

The differences are dramatic: the evidence-based physician has perhaps 3 pregnancies per 1000 patients and no issues with pap smears since none were done. I have no doubt that many of the women returned dissatisfied with the Nexplanon and some likely discontinued and switched methods, and I have allowed for return visits each year. A greater number of women who received birth control pills will return with problems and a desire to switch. But over 3 years, 252 of the women who were given birth control pills will become pregnant.

These young women came to get birth control and were instead victimized. The effects of unintended pregnancy on young women are profound. Women who have children under age 18 are nearly twice as likely to never graduate high school, nearly two-thirds live in poverty and receive government assistance, three-quarters never receive child support, and their children grow up underperforming in school. These are dramatic societal costs that must be considered a consequence of physicians ignoring evidence-based guidelines.

So what about the money? The physician who follows evidence-based guidelines will collect about $350,000 over the three year period; whereas the physician who does not will collect nearly $1.3 million in the same time. The physicians are financially incentivized to hurt young women. These extra monetary gains for the physician cost our healthcare system nearly 6.3 million dollars more. This story can be repeated for a variety of other medical problems in every speciality.

Another Ob/Gyn example: a physician who treats abnormal uterine bleeding with a hysterectomy when a lesser alternative might have sufficed (such as a Mirena IUD, birth control pills, or an endometrial ablation). The physician is financially incentivized to perform a hysterectomy because her fee is higher for that service compared to other, more appropriate services; but in order for the physician to make a few hundred dollars more, the healthcare system is drained of many thousands of dollars (the hospital charges for a hysterectomy can be enormous) and the patient is exposed to a higher risk procedure when a lower risk intervention was likely to work just as well.

The current model of financially incentivizing physicians to provide as much care as possible (what is called fee for service) is bankrupting our healthcare system and harming patients with too many interventions, too many prescriptions, and too much care. No good alternative to this system has been proposed. But fee for service provides no incentive for physicians to choose lower cost treatments, to prescribe less expensive drugs, or to perform expensive interventions only when truly needed. No good alternative to fee for service has been proposed, but every important player in healthcare realizes that the model needs to be changed in order to improve the quality of care and reduce costs.

The financial services industry faces a similar crisis. Currently, most financial advisors make money off of selling products, collecting fees for the transactions they conduct and the financial products that they sell, and, in some cases, residual fees from future earnings. This incentivizes advisors to sell products (stocks, bonds, equities, etc.) that produce the largest fees or the biggest return for them, not for the client. New federal rules are attempting to address this problem by requiring financial advisors to comply with fiduciary standards, meaning that they must put the clients’ needs above their own. How is this incentivized? A fiduciary advisor makes more money only if the client does; the fiduciary rules outlaw transactional fee (like selling an investment product) and instead tie the advisor’s fees to future client earnings. Full disclosure of conflicts of interest are also required. Essentially, the financial services industry is transitioning from a fee for service model (how many investment products can I sell?) to a fee for better outcomes model (I’ll only make more money if my client does).

In theory, physicians should comply with fiduciary rules for patient care because of our professional oath; doing what is best for patients without exploiting them is the most essential ethic of any medical professional. But professionalism among medical doctors is a true rarity, and the proof of this is the vast majority of physicians who choose the unethical approach of rejecting evidence-based guidelines and instead exploiting patients for financial gain.

Now I’m not suggesting that most physicians who don’t follow evidence-based guidelines do so because they have consciously chosen to financially exploit the patient, but in the example above of a 1,000 patients in a four physician group, if those physicians choose to follow evidence-based guidelines, they would likely not need the fourth physician and they wouldn’t be able to pay her. The finances of medical practice dictate far too many decisions, and sometimes this influence is subconscious (though many times it is not). Physicians are smart, and they are too good at couching their decisions to over-utilize interventions as if it’s a benefit to those patients: it’s never because physicians want to make more money doing it, it’s always because they are doing what’s best for patients. But the example above, which is typical for similar issues in many specialities, shows that outcomes are not better and the cost of not following evidence-based guidelines are extraordinary.

Physicians need to be financially incentivized to follow ethical, fiduciary principles. The Hippocratic Oath isn’t cutting it. Financial services advisors under fiduciary guidelines are paid more only if the client does well, and so too doctors should make more money only if their patients do well. In a $55 visit, I can choose to prescribe an inexpensive generic drug for a problem (at a cost of $48 a year) or I can choose to prescribe an expensive branded drug (at a cost of $4200 a year). Insurance companies need to recognize this and reward the physician who makes good decisions. It is much better to pay the physician another $100 to have the time to talk to the patient and educate her than it is to pay $4200 a year for the poor decision. Why not pay physicians as much for IUD insertions as they are payed for hysterectomies? Yes, I am quite serious. You would see so many IUDs being inserted that it would make your head spin, but the cost of healthcare overall would drop dramatically and patient outcomes would improve. High school graduation rates would increase. Poverty would decrease. Paychecks would go up as less money was spent on health insurance premiums. Why not pay more for vaginal deliveries than cesarean deliveries? In general, paying more for the good care that physicians provide and less for the bad care physicians provide is the solution. But what is good care and what is bad care? That’s the struggle.

I suggest three things.

A physician who does 10 hysterectomies per year should, in most cases, be paid more than a physician who does 100 hysterectomies per year. The number of unnecessary surgeries and interventions in all disciplines of medicine is staggering, and it results in high-cost, low-quality healthcare that is bankrupting the US system. I wish that the Hippocratic Oath were enough and that physicians always did what was best for their patients even if it meant making less money, but this wish is a pipe dream. We need appropriate financial incentives.

Published Date : August 30, 2016

Categories : Cognitive Bias, Evidence Based Medicine

Published Date : August 29, 2016

Categories : Evidence Based Medicine

Once the diagnosis is made correctly, the treatments begin. Selection of prescription drugs is one of the important (and costly) things that physicians do. How do we decide which drugs to use? Or, a better question, How should we decide?

First, let’s consider a patient who represents an almost typical case these days: a diabetic who has neuropathy, hypertension, hyperlipidemia, GERD, hypothyroidism, bipolar depression, and an overactive bladder. Even if this might not represent the problem list of your typical patient, it likely represents the problem list of any two patients (the average American between ages 19 and 64 takes 12 prescription drugs per years – many of which are unnecessary).

For each issue on the problem list, let’s select two common treatments. Doctor A tries to use lower cost drugs and generics when available. Doctor B favors newer drugs and believes that he is providing world-class medicine (at least that’s what the drug reps keep telling him). Let’s see what it costs Doctor A and Doctor B to treat this patient each year. Also, we’ll see what the ten year cost of treatment is and then magnify that times 500 patients (to represent a typical treatment panel of patients that a primary care doctor might have).

The difference in the two approaches is dramatic: Doctor B has spent nearly a 1/4 billion dollars more than Doctor A in just 10 years on just 500 patients. Add to this the increased costs of poorly selected short-term medications (like choosing Fondaparinux at $586/day versus Lovenox at $9.25/day, or choosing Benicar at $251/day rather than Cozaar at $8/day), unnecessarily prescribed medications (like antibiotics for earaches, sore throats, and sinus infections), unnecessary tests (like CT scans for headaches or imaging for low back pain), and the cost differences between Doctor A and Doctor B soar, all based upon decisions that the two doctors make in different ways.

In a career, Doctor B may cost the healthcare system easily $1 billion more than Doctor A. The cost of prescription drugs (and more importantly, the way doctors decide to use them) more than explains the run-away costs and low value of US healthcare. The top 100 brand-name prescription drugs in the US (by dollar amounts) netted $194 billion in sales a year as of 2015 (plus pharmacy mark-ups, etc.). This list of drugs includes Lyrica, Januvia, Dexilant, Benicar, Victoza, Synthroid, and Abilify (all mentioned above) plus Crestor (it recently went generic so I have substituted the similarly priced, non-generic Livalo), Vesicare (similarly priced to the Enablex listed above), and Pristiq (which is actually more expense than the Viibryd I used on the list – Viibryd is one of the top 100 prescribed branded drugs, just not a top 100 money maker). So Doctor B’s choice of drugs is representative for the choices many doctors are making in the US.

Now in fairness, the most prescribed drugs by number of prescriptions are generics, but because they are so inexpensive, they do not make the money list. Doctor A’s choice of Metformin, Levothyroxine, and Omeprazole (generic Prilosec) are all among the Top 10 most prescribed generics. Unfortunately, the most prescribed drug of all (with 123 million prescriptions in 2015) was generic Lortab.

So who’s right, Dr. A or Dr. B? Let’s go through a process to determine what drug we should prescribe.

Is a drug treatment necessary?

Just because a patient has a diagnosis doesn’t mean she necessarily needs a drug to match it. Physicians tend to overestimate the benefits of most treatments and do a poor job of conveying the potential benefits (and risks) to patients. Non-medical treatments (like lifestyle changes, counseling, etc.) are undervalued and perceived as less effective or just too much work. Providers often feel like they have to write a prescription or they will have an unsatisfied patient (in other words, they feel that if a patient presents with a complaint, it must be addressed with an intervention rather than just education and reassurance). Even worse, many clinicians believe that if they prescribe a medication during a visit, they can bill for a higher level visit, so they feel financially rewarded for prescribing. For example,

In general, overuse of prescription medications happens for the following reasons:

Once it is decided that a drug is necessary for treatment, the next question is, Which one?

Which drug is appropriate?

In order to determine this, we must first decide what the goal of treatment is in order to select a medication that satisfies that goal. This sounds obvious, but it isn’t always done appropriately. The next part is trickier. In general, we need to select the lowest-cost medication that fulfills this treatment goal. Dr. A has done this in the above example quite well. Dr. B would rationalize his choices by adding that he has also picked either the most effective drug and/or the one with the least side effects. This rationale sounds attractive, and drug companies prey on the desire of physicians to use the best drug with the fewest side effects (and the ego-boosting idea that they are being innovative). But it is the wrong strategy. Here’s why.

Let’s say that Drug A is 90% effective at treating the desired condition and carries with it a 5% risk of an undesirable side effect. Meanwhile, Drug B is 95% effective at treating the condition and carries only a 2.5% risk of the side effect. Drug B is then marketed as having half the number of treatment failures as Drug A and half the number of side effects as Drug A. Drug A costs $4 while Drug B costs $350. So which drug should we use? If 100 people use Drug B, then approximately 92 will be treated successfully without the side effect at a cost of $386,400 per year. Eight people will remain untreated. If 100 people use Drug A, then approximately 85 people will be treated successfully without the side effect at a cost of $4,080 per year. After failing Drug A, an additional 7 people will use Drug B successfully at a cost of $29,400 per year, with 8 people still untreated. This try-and-fail or stepwise approach, rather than the “Gold Standard” approach, treated just as many people successfully but saved $352,920 per 100 people per year.

This scenario assumes that such dramatic differences between the two drugs even exist. The truth is that this is rarely the case. Such dramatic differences in side effects and efficacy do not exist for the drugs listed in the scenario above. What’s worse, in many cases the more expensive drug is actually inferior. HCTZ has long had superior mortality benefit data compared to ACE-Is and ARBs, but due to clever marketing, has never been utilized as much as it should be. Metformin is simply one of the best (and cheapest) drugs for diabetes, yet no one markets it and there are no samples in the supply closet. Fondaparinux is an important drug for the rare person who has heparin-induced thrombocytopenia (HIT), but the incidence of HIT among users of prophylactic Lovenox is less than 1/1000, hardly justifying the 63-fold increased price of fondaparinux. The clinical differences between Cozaar and Benicar can hardly begin to justify the 31-fold price difference. To decide by policy that every patient with overactive bladder should receive Vesicare ($300), Detrol LA ($320), or Enablex ($350) as a first line drug when most patients are satisfied with Oxybutynin ($4) is the attitude that is bankrupting US healthcare. In some cases, physicians prescribe the exact same drug at a costlier price (e.g. Sarafem for $486 instead of the chemically identical fluoxetine for $6).

A word of caution about interpreting drug comparison trials: drug companies have ten of billions of dollars at stake when marketing new drugs. The trials that are quoted to you are produced and funded by those drug companies. They only publish the studies that show positive results. Drug studies are, by far, the most biased of all publications so be incredibly careful in believing the bottom line from the studies. Here’s a howardism,

If the drug were really that good, it wouldn’t need to be marketed so aggressively.

Even when one drug is substantially better than another, it still may not be appropriate to prescribe it. Many times, the prohibitive cost of the perceived “better” drug prevents patients from actually getting and maintaining use of the drug, leading to significantly poorer outcomes than would have existed with the “inferior” drug. The best drug is the one the patient can afford to take.

Thus, pick the drug that is designed to achieve the desired goal that is least expensive (or at least pick a drug that is available as a generic), then specifically decide why that drug is not an option before picking a more expensive one (e.g. the patient is allergic to the drug, the patient previously failed the drug, etc.). (Wonder how much drugs cost? All the prices here are taken from goodrx.com).

What influences determine the drugs that clinicians prescribe?

There are two facts about drug company marketing. The first is the physicians do not believe that they are influenced by drug company advertisements, representatives, free lunches and dinners, pens, sampling, CME-events, etc. The second is that drug company marketing is the number one influence on clinicians’ prescribing habits. In 2012, US drug companies spent $3 billion marketing to consumers while spending $24 billion marketing directly to doctors. They spend that money because they know it yields returns. Remember that just one Dr. B is worth nearly 1/4 of a billion dollars in a ten year period to pharmaceutical companies. They are more than happy to buy as many meals as it takes. But doesn’t this money support research? It’s true that most drug companies spend billions per year on research and development, but the total R&D expenditures are usually about half of marketing expenses alone, and obviously a fraction of the $350+ billion revenues. But don’t samples help me take care of my poor patients? No. They help influence your prescribing habits and they give you the impression that the drug is a highly desirable miracle that would be wonderful to give away to the needy because it’s just that good. What can help you take care of your patients are $4 generic drugs. Read more about the financials of the industry and the rising costs of drugs here.

These problems would be much worse than they are if insurance companies (“the evil insurance companies”) didn’t have prescribing tiers that force patients to ask their doctors for cheaper alternatives and if they didn’t deny coverage entirely for many brand-name drugs. But it is still an enormous problem, resulting in hundreds of billions of dollars of excess cost to the US healthcare system each year.

Simply by being more like Dr. A and less like Dr. B, physicians could rapidly and dramatically reduce the cost of US healthcare.

Published Date : August 25, 2016

Categories : Cognitive Bias

All men make mistakes, but a good man yields when he knows his course is wrong, and repairs the evil. The only crime is pride.

— Sophocles, Antigone

(Thanks to my friend Michelle Tanner, MD who contributed immensely to this article).

In the post Cognitive Bias, we went over a list of cognitives biases that may affect our clinical decisions. There are many more, and sometimes these biases are given different names. Rather than use the word bias, many authors, including the thought-leader in this field, Pat Croskerry, prefer the term cognitive dispositions to respond (CDR) to describe many situations where clinicians’ cognitive processes might be distorted, including the use of inappropriate heuristics, cognitive biases, logical fallacies, and other mental errors. The term CDR is thought to carry less of a negative connotation, and indeed, physicians have been resistant to interventions aimed at increasing awareness of and reducing errors due to cognitive biases.